8 High-dimensional data

8.1 The Big Picture

-

The rise of high-dimensional data. The new data frontiers in social sciences—text (Gentzkow et al. 2019; Grimmer and Stewart 2013) and image (Joo and Steinert-Threlkeld 2018)—are all high-dimensional data.

1000 common English words for 30-word tweets: \(1000^{30}\) similar to N of atoms in the universe (Gentzkow et al. 2019)

Belloni, Alexandre, Victor Chernozhukov, and Christian Hansen. “High-dimensional methods and inference on structural and treatment effects.” Journal of Economic Perspectives 28, no. 2 (2014): 29-50.

The rise of the new approach: statistics + computer science = machine learning

-

Statistical inference

\(y\) <- some probability models (e.g., linear regression, logistic regression) <- \(x\)

\(y\) = \(X\beta\) + \(\epsilon\)

The goal is to estimate \(\beta\)

-

Machine learning

\(y\) <- unknown <- \(x\)

\(y\) <-> decision trees, neural nets <-> \(x\)

For the main idea behind prediction modeling, see Breiman, Leo (Berkeley stat faculty who passed away in 2005). “Statistical modeling: The two cultures (with comments and a rejoinder by the author).” Statistical science 16, no. 3 (2001): 199-231.

“The problem is to find an algorithm \(f(x)\) such that for future \(x\) in a test set, \(f(x)\) will be a good predictor of \(y\).”

“There are two cultures in the use of statistical modeling to reach conclusions from data. One assumes that the data are generated by a given stochastic data model. The other uses algorithmic models and treats the data mechanism as unknown.”

How ML differs from econometrics?

A review by Athey, Susan, and Guido W. Imbens. “Machine learning methods that economists should know about.” Annual Review of Economics 11 (2019): 685-725.

-

Stat:

Specifying a target (i.e., an estimand)

Fitting a model to data using an objective function (e.g., the sum of squared errors)

Reporting point estimates (effect size) and standard errors (uncertainty)

Validation by yes-no using goodness-of-fit tests and residual examination

-

ML:

Developing algorithms (estimating f(x))

Prediction power, not structural/causal parameters

Basically, high-dimensional data statistics (N < P)

The major problem is to avoid “the curse of dimensionality” (too many features - > overfitting)

Validation: out-of-sample comparisons (cross-validation) not in-sample goodness-of-fit measures

So, it’s curve-fitting, but the primary focus is unseen (test data), not seen data (training data)

-

A quick review on ML lingos for those trained in econometrics

Sample to estimate parameters = Training sample

Estimating the model = Being trained

Regressors, covariates, or predictors = Features

Regression parameters = weights

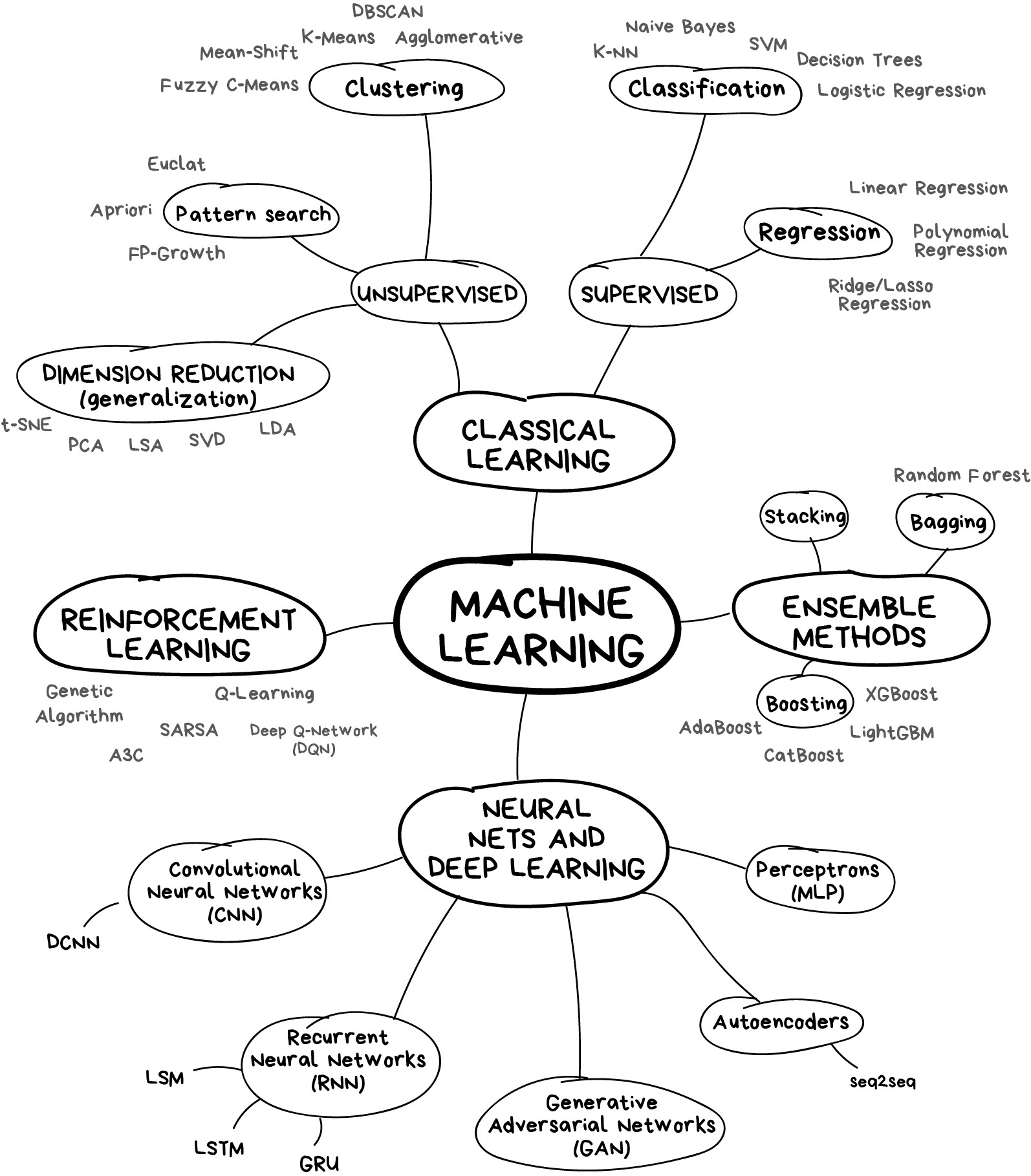

Prediction problems = Supervised (some \(y\) are known) + Unsupervised (\(y\) unknown)

8.2 Dataset

One of the popular datasets used in machine learning competitions

# Install pacman if needed, then load packages

if (!requireNamespace("pacman", quietly = TRUE)) {

install.packages("pacman")

}

pacman::p_load(

here, # Project‐root file paths

tidyverse, # Data manipulation & visualization (dplyr, ggplot2, etc.)

tidymodels, # Modeling ecosystem (parsnip, recipes, yardstick, etc.)

doParallel, # Parallel backend for modeling (foreach, caret, tidymodels)

patchwork, # Compose multiple ggplots into one layout

remotes, # Install packages from GitHub and other remote sources

nnls, # Non-negative least squares (required by SuperLearner)

gam, # Generalized additive models (required by SuperLearner)

SuperLearner, # Ensemble learning via stacked generalization

vip, # Variable importance plots (model‐agnostic)

glmnet, # Regularized regression (lasso, ridge, elastic net)

finetune, # Advanced hyperparameter tuning (race methods, etc.)

glue, # String interpolation

xgboost, # Gradient boosting (XGBoost)

rpart, # Recursive partitioning trees

ranger, # Fast random forests with C++ backend

corrr, # Correlation analysis

tm, # Text mining (required by stm)

SnowballC, # Stemming (required by stm)

conflicted # Explicit conflict resolution for masking functions

)

conflicted::conflict_prefer("filter", "dplyr")

## Jae's custom functions

source(here("functions", "ml_utils.r"))

# Import the dataset

data_original <- read_csv(here("data", "heart.csv"))

glimpse(data_original)## Rows: 303

## Columns: 14

## $ age <dbl> 63, 37, 41, 56, 57, 57, 56, 44, 52, 57, 54, 48, 49, 64, 58, 5…

## $ sex <dbl> 1, 1, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1…

## $ cp <dbl> 3, 2, 1, 1, 0, 0, 1, 1, 2, 2, 0, 2, 1, 3, 3, 2, 2, 3, 0, 3, 0…

## $ trestbps <dbl> 145, 130, 130, 120, 120, 140, 140, 120, 172, 150, 140, 130, 1…

## $ chol <dbl> 233, 250, 204, 236, 354, 192, 294, 263, 199, 168, 239, 275, 2…

## $ fbs <dbl> 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0…

## $ restecg <dbl> 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 1…

## $ thalach <dbl> 150, 187, 172, 178, 163, 148, 153, 173, 162, 174, 160, 139, 1…

## $ exang <dbl> 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0…

## $ oldpeak <dbl> 2.3, 3.5, 1.4, 0.8, 0.6, 0.4, 1.3, 0.0, 0.5, 1.6, 1.2, 0.2, 0…

## $ slope <dbl> 0, 0, 2, 2, 2, 1, 1, 2, 2, 2, 2, 2, 2, 1, 2, 1, 2, 0, 2, 2, 1…

## $ ca <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 0…

## $ thal <dbl> 1, 2, 2, 2, 2, 1, 2, 3, 3, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3…

## $ target <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

# Create a copy

data <- data_original

theme_set(theme_minimal())8.4 tidymodels

-

Like

tidyverse,tidymodelsis a collection of packages. Why taking a tidyverse approach to machine learning?

-

Benefits

Readable code

Reusable data structures

Extendable code

tidymodels are an integrated, modular, extensible set of packages that implement a framework that facilitates creating predicative stochastic models. - Joseph Rickert@RStudio

Currently, 238 models are available

The following materials are based on the machine learning with tidymodels workshop I developed for D-Lab. The original workshop was designed by Chris Kennedy and Evan Muzzall.

8.5 Pre-processing

recipes: for pre-processingtextrecipesfor text pre-processingStep 1:

recipe()defines target and predictor variables (ingredients).-

Step 2:

step_*()defines preprocessing steps to be taken (recipe).The preprocessing steps list draws on the vignette of the

parsnippackage.dummy: Also called one-hot encoding

zero variance: Removing columns (or features) with a single unique value

impute: Imputing missing values

decorrelate: Mitigating correlated predictors (e.g., principal component analysis)

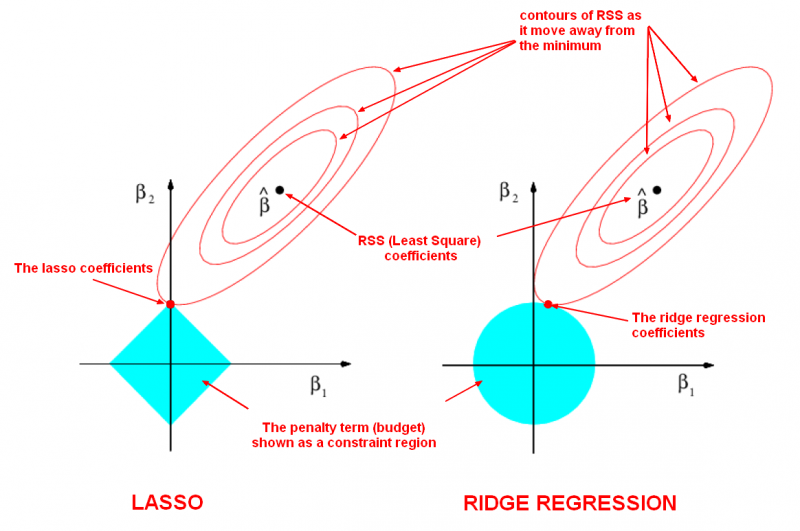

normalize: Centering and/or scaling predictors (e.g., log scaling). Scaling matters because many algorithms (e.g., lasso) are scale-variant (except tree-based algorithms). Remind you that normalization (sensitive to outliers) = \(\frac{X - X_{min}}{X_{max} - X_{min}}\) and standardization (not sensitive to outliers) = \(\frac{X - \mu}{\sigma}\)

transform: Making predictors symmetric

Step 3:

prep()prepares a dataset to base each step on.Step 4:

bake()applies the preprocessing steps to your datasets.

In this course, we focus on two preprocessing tasks.

- One-hot encoding (creating dummy/indicator variables)

# Turn selected numeric variables into factor variables

data <- data %>%

dplyr::mutate(across(c("sex", "ca", "cp", "slope", "thal"), as.factor))

glimpse(data)## Rows: 303

## Columns: 14

## $ age <dbl> 63, 37, 41, 56, 57, 57, 56, 44, 52, 57, 54, 48, 49, 64, 58, 5…

## $ sex <fct> 1, 1, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1…

## $ cp <fct> 3, 2, 1, 1, 0, 0, 1, 1, 2, 2, 0, 2, 1, 3, 3, 2, 2, 3, 0, 3, 0…

## $ trestbps <dbl> 145, 130, 130, 120, 120, 140, 140, 120, 172, 150, 140, 130, 1…

## $ chol <dbl> 233, 250, 204, 236, 354, 192, 294, 263, 199, 168, 239, 275, 2…

## $ fbs <dbl> 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0…

## $ restecg <dbl> 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 1…

## $ thalach <dbl> 150, 187, 172, 178, 163, 148, 153, 173, 162, 174, 160, 139, 1…

## $ exang <dbl> 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0…

## $ oldpeak <dbl> 2.3, 3.5, 1.4, 0.8, 0.6, 0.4, 1.3, 0.0, 0.5, 1.6, 1.2, 0.2, 0…

## $ slope <fct> 0, 0, 2, 2, 2, 1, 1, 2, 2, 2, 2, 2, 2, 1, 2, 1, 2, 0, 2, 2, 1…

## $ ca <fct> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 0…

## $ thal <fct> 1, 2, 2, 2, 2, 1, 2, 3, 3, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3…

## $ target <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…- Imputation

## # A tibble: 1 × 14

## age sex cp trestbps chol fbs restecg thalach exang oldpeak slope

## <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int>

## 1 0 0 0 0 0 0 0 0 0 0 0

## # ℹ 3 more variables: ca <int>, thal <int>, target <int>

# Add missing values

data$oldpeak[sample(seq(data), size = 10)] <- NA

# Check missing values

# Check the number of missing values

data %>%

map_df(~ is.na(.) %>% sum())## # A tibble: 1 × 14

## age sex cp trestbps chol fbs restecg thalach exang oldpeak slope

## <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int>

## 1 0 0 0 0 0 0 0 0 0 10 0

## # ℹ 3 more variables: ca <int>, thal <int>, target <int>## # A tibble: 1 × 14

## age sex cp trestbps chol fbs restecg thalach exang oldpeak slope

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0 0 0 0 0 0 0 0 0 0.0330 0

## # ℹ 3 more variables: ca <dbl>, thal <dbl>, target <dbl>8.5.1 Regression setup

8.5.1.2 Data splitting using random sampling

# for reproducibility

set.seed(1234)

# split

split_reg <- initial_split(data, prop = 0.7)

# training set

raw_train_x_reg <- training(split_reg)

# test set

raw_test_x_reg <- testing(split_reg)8.5.1.3 recipe

# Regression recipe

rec_reg <- raw_train_x_reg %>%

# Define the outcome variable

recipe(age ~ .) %>%

# Median impute oldpeak column

step_impute_median(oldpeak) %>%

# Expand "sex", "ca", "cp", "slope", and "thal" features out into dummy variables (indicators).

step_dummy(c("sex", "ca", "cp", "slope", "thal"))

# Prepare a dataset to base each step on

prep_reg <- rec_reg %>% prep(retain = TRUE)

# x features

train_x_reg <- juice(prep_reg, all_predictors())

test_x_reg <- bake(

object = prep_reg,

new_data = raw_test_x_reg, all_predictors()

)

# y variables

train_y_reg <- juice(prep_reg, all_outcomes())$age %>% as.numeric()

test_y_reg <- bake(prep_reg, raw_test_x_reg, all_outcomes())$age %>% as.numeric()

# Checks

names(train_x_reg) # Make sure there's no age variable!

class(train_y_reg) # Make sure this is a continuous variable!- Note that other imputation methods are also available.

- You can also create your own

step_functions. For more information, see tidymodels.org.

8.5.2 Classification setup

8.5.2.3 recipe

# Classification recipe

rec_class <- raw_train_x_class %>%

# Define the outcome variable

recipe(target ~ .) %>%

# Median impute oldpeak column

step_impute_median(oldpeak) %>%

# Expand "sex", "ca", "cp", "slope", and "thal" features out into dummy variables (indicators).

step_normalize(age) %>%

step_dummy(c("sex", "ca", "cp", "slope", "thal"))

# Prepare a dataset to base each step on

prep_class <- rec_class %>% prep(retain = TRUE)

# x features

train_x_class <- juice(prep_class, all_predictors())

test_x_class <- bake(prep_class, raw_test_x_class, all_predictors())

# y variables

train_y_class <- juice(prep_class, all_outcomes())$target %>% as.factor()

test_y_class <- bake(prep_class, raw_test_x_class, all_outcomes())$target %>% as.factor()

# Checks

names(train_x_class) # Make sure there's no target variable!

class(train_y_class) # Make sure this is a factor variable!8.6 Supervised learning

x -> f - > y (defined)

8.6.1 OLS and Lasso

8.6.1.1 parsnip

- Build models (

parsnip)

- Specify a model

- Specify an engine

- Specify a mode

# OLS spec

ols_spec <- linear_reg() %>% # Specify a model

set_engine("lm") %>% # Specify an engine: lm, glmnet, stan, keras, spark

set_mode("regression") # Declare a mode: regression or classification

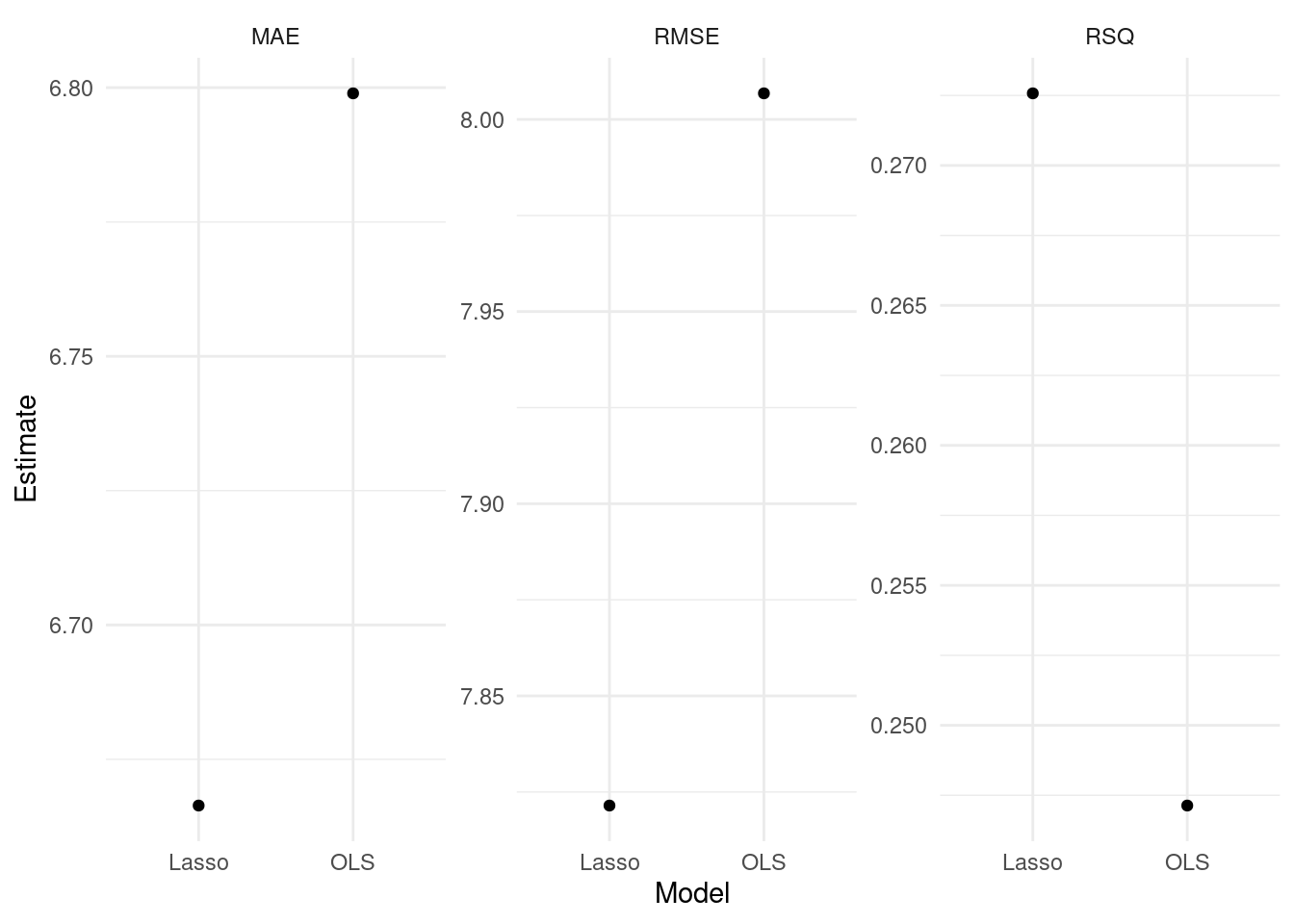

Lasso is one of the regularization techniques along with ridge and elastic-net.

# Lasso spec

lasso_spec <- linear_reg(

penalty = 0.1, # tuning hyperparameter

mixture = 1

) %>% # 1 = lasso, 0 = ridge

set_engine("glmnet") %>%

set_mode("regression")

# If you don't understand parsnip arguments

lasso_spec %>% translate() # See the documentation- Fit models

8.6.1.2 yardstick

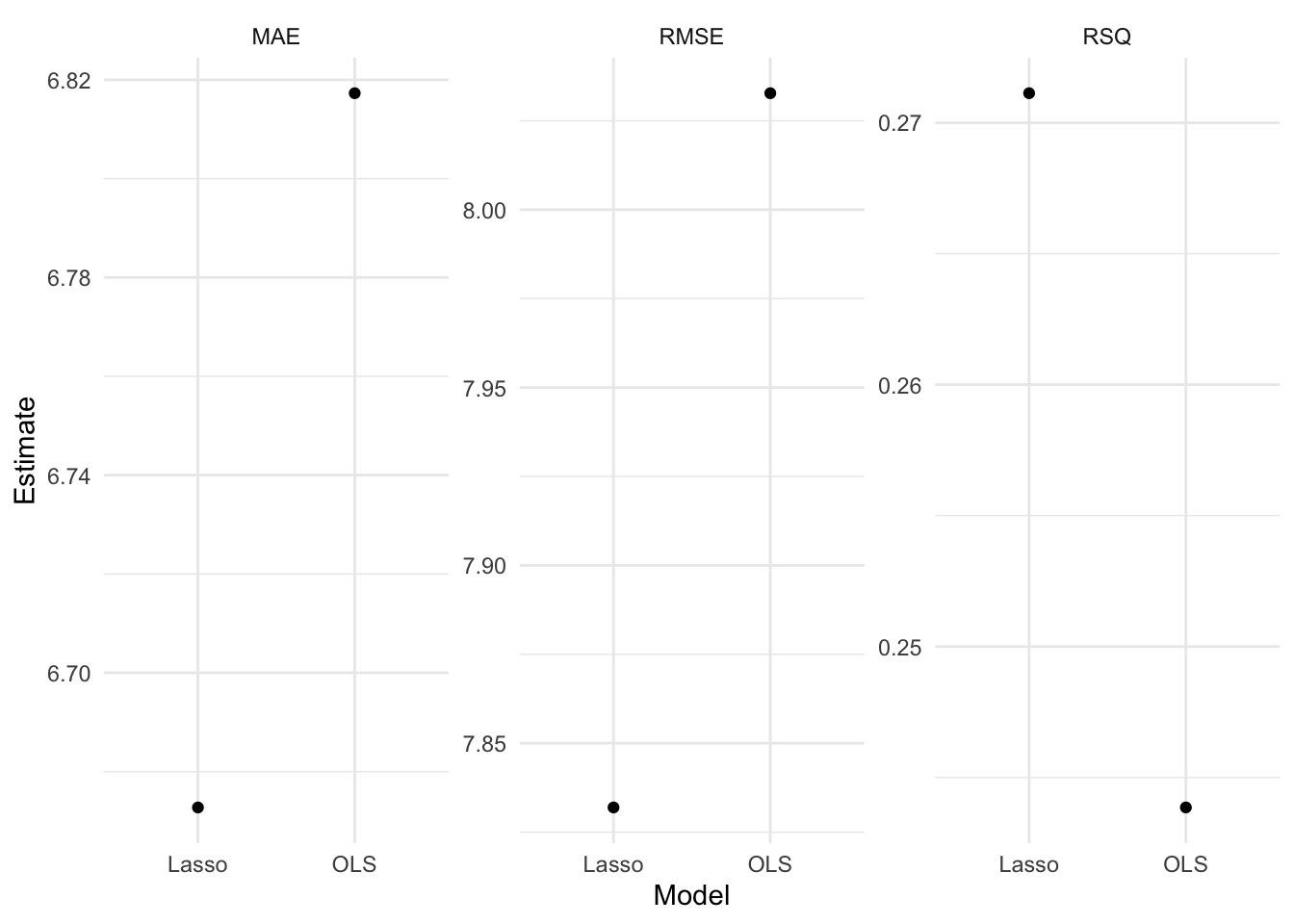

- Visualize model fits

## [[1]]

##

## [[2]]

# Define performance metrics

metrics <- yardstick::metric_set(rmse, mae, rsq)

# Evaluate many models

evals <- purrr::map(list(ols_fit, lasso_fit), evaluate_reg) %>%

reduce(bind_rows) %>%

mutate(type = rep(c("OLS", "Lasso"), each = 3))

# Visualize the test results

evals %>%

ggplot(aes(x = type, y = .estimate)) +

geom_point() +

labs(

x = "Model",

y = "Estimate"

) +

facet_wrap(~ glue("{toupper(.metric)}"), scales = "free_y") - For more information, read Tidy Modeling with R by Max Kuhn and Julia Silge.

- For more information, read Tidy Modeling with R by Max Kuhn and Julia Silge.

8.6.1.3 tune

Hyperparameters are parameters that control the learning process.

8.6.1.3.1 tune ingredients

- Search space for hyperparameters

Grid search: a grid of hyperparameters

Random search: random sample points from a bounded domain

# tune() = placeholder

tune_spec <- linear_reg(

penalty = tune(), # tuning hyperparameter

mixture = 1

) %>% # 1 = lasso, 0 = ridge

set_engine("glmnet") %>%

set_mode("regression")

# penalty() searches 50 possible combinations

lambda_grid <- grid_regular(penalty(), levels = 50)

8.6.1.3.2 Add these elements to a workflow

# Tuning results

rec_res <- rec_wf %>%

tune_grid(

resamples = rec_folds,

grid = lambda_grid,

control = control_grid(parallel_over = "resamples")

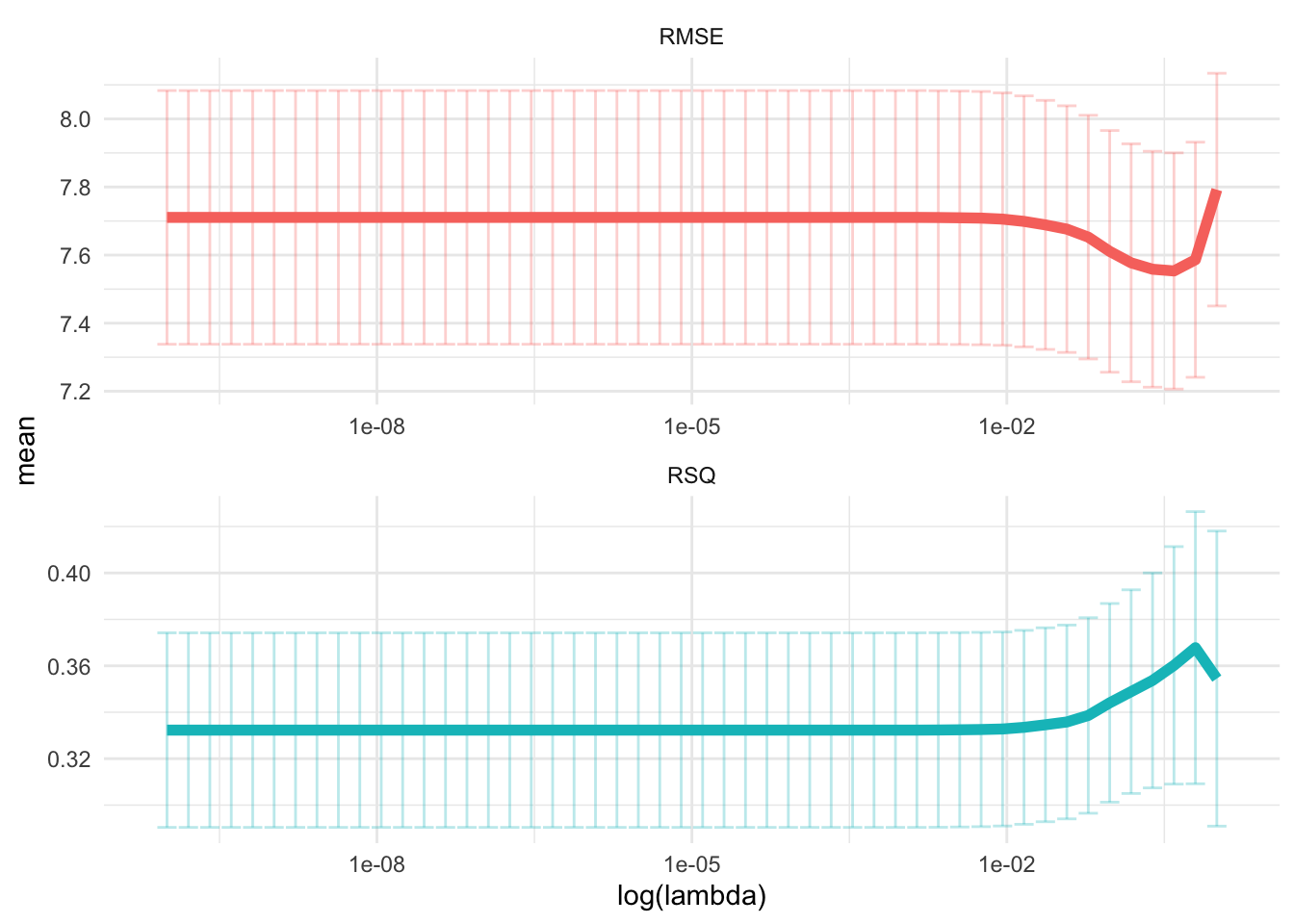

)8.6.1.3.3 Visualize

# Visualize

rec_res %>%

collect_metrics() %>%

ggplot(aes(penalty, mean, col = .metric)) +

geom_errorbar(aes(

ymin = mean - std_err,

ymax = mean + std_err

),

alpha = 0.3

) +

geom_line(size = 2) +

scale_x_log10() +

labs(x = "log(lambda)") +

facet_wrap(~ glue("{toupper(.metric)}"),

scales = "free",

nrow = 2

) +

theme(legend.position = "none")

8.6.1.3.4 Select

conflict_prefer("filter", "dplyr")

top_rmse <- show_best(rec_res, metric = "rmse")

best_rmse <- select_best(rec_res, metric = "rmse")

glue('The RMSE of the intiail model is

{evals %>%

filter(type == "Lasso", .metric == "rmse") %>%

select(.estimate) %>%

round(2)}')## The RMSE of the intiail model is

## 7.81

glue('The RMSE of the tuned model is {rec_res %>%

collect_metrics() %>%

filter(.metric == "rmse") %>%

arrange(mean) %>%

dplyr::slice(1) %>%

select(mean) %>%

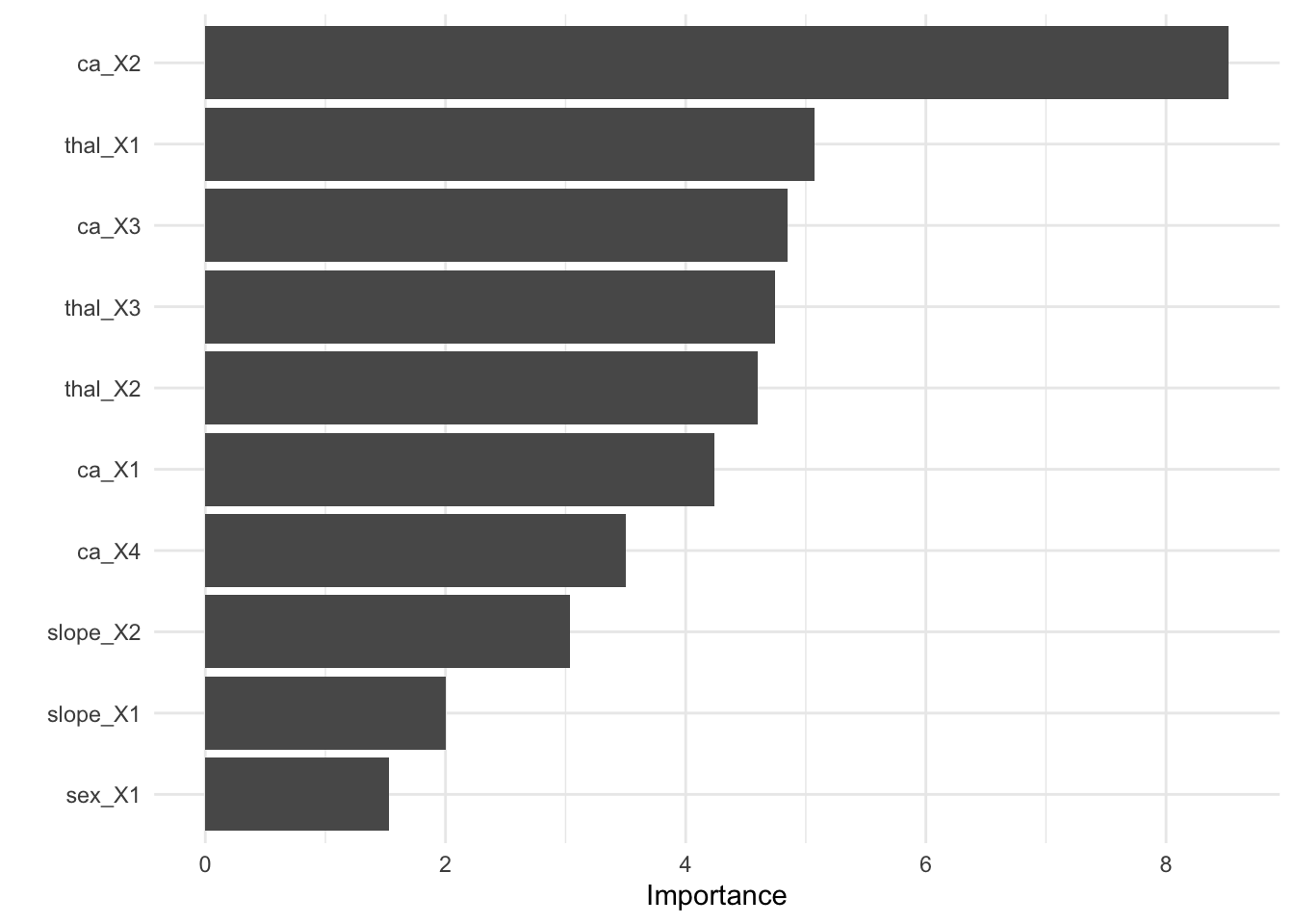

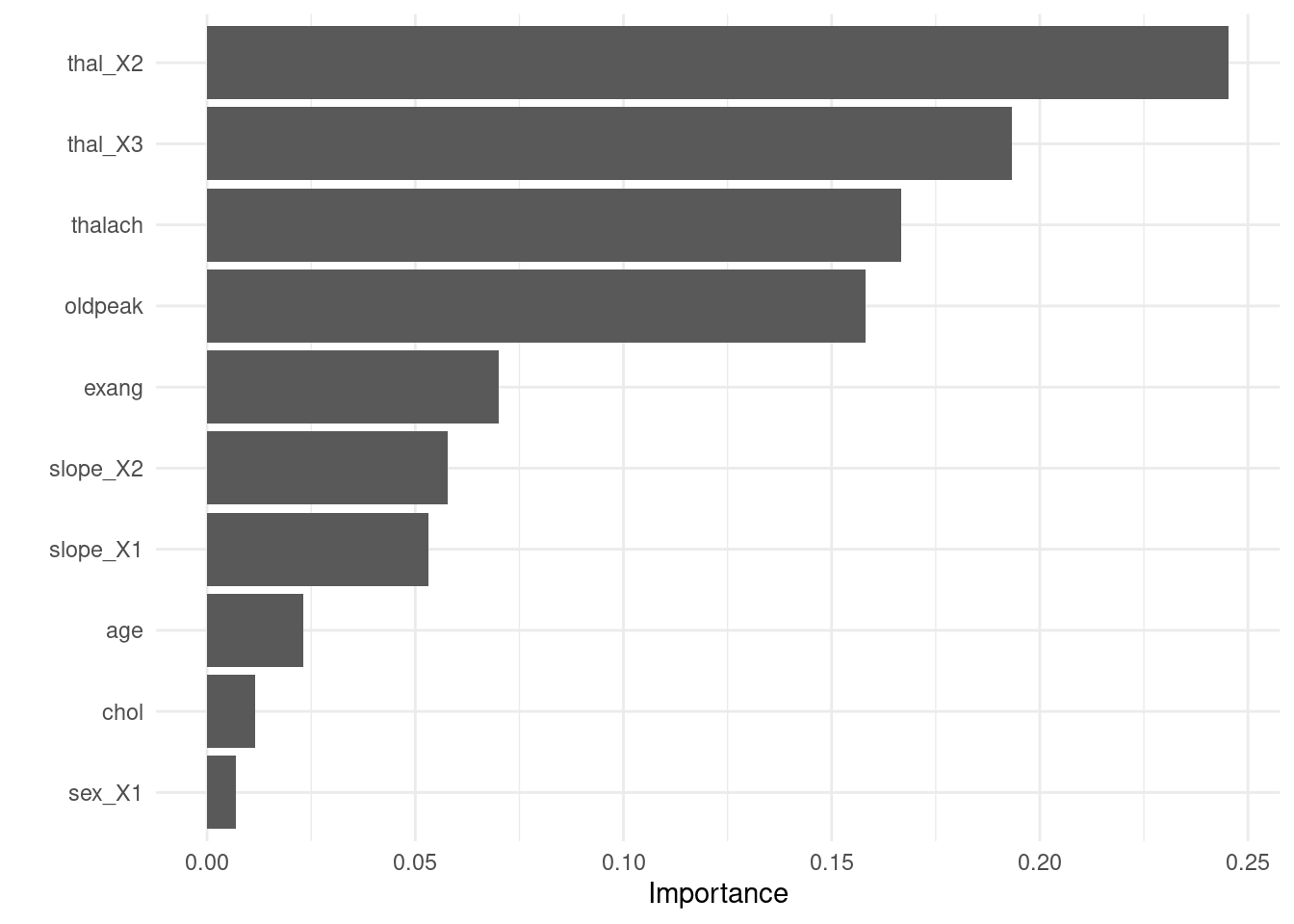

round(2)}')## The RMSE of the tuned model is 7.55- Finalize your workflow and visualize variable importance

finalize_lasso <- rec_wf %>%

finalize_workflow(best_rmse)

finalize_lasso %>%

fit(train_x_reg %>% bind_cols(tibble(age = train_y_reg))) %>%

pull_workflow_fit() %>%

vip::vip()

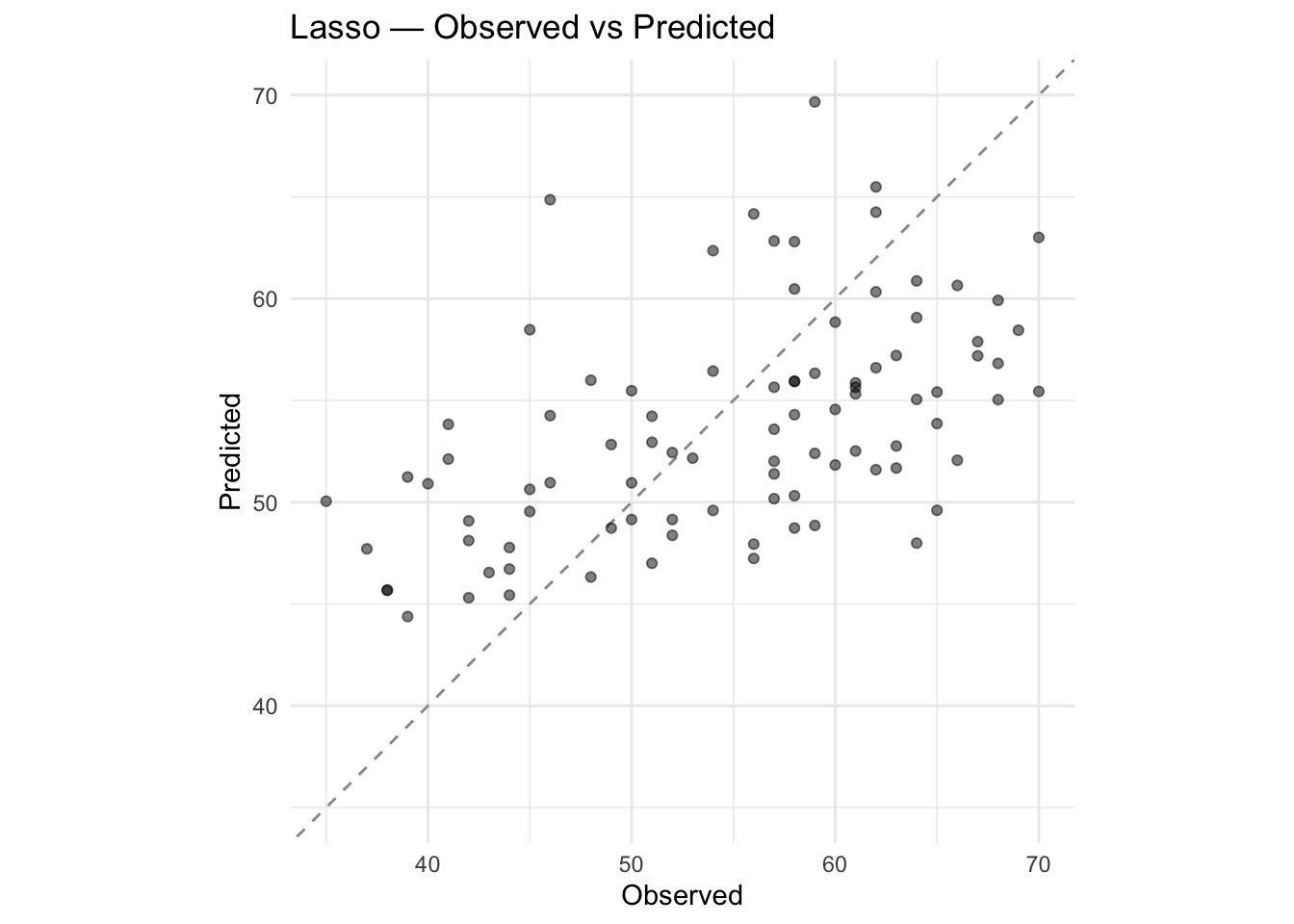

8.6.1.3.5 Test fit

- Apply the tuned model to the test dataset

test_fit <- finalize_lasso %>%

fit(test_x_reg %>% bind_cols(tibble(age = test_y_reg)))

evaluate_reg(test_fit)## # A tibble: 3 × 3

## .metric .estimator .estimate

## <chr> <chr> <dbl>

## 1 rmse standard 7.11

## 2 mae standard 5.83

## 3 rsq standard 0.3978.6.2 Decision tree

8.6.2.1 parsnip

- Build a model

- Specify a model

- Specify an engine

- Specify a mode

# workflow

tree_wf <- workflow() %>% add_formula(target ~ .)

# spec

tree_spec <- decision_tree(

# Mode

mode = "classification",

# Tuning hyperparameters

cost_complexity = NULL,

tree_depth = NULL

) %>%

set_engine("rpart") # rpart, c5.0, spark

tree_wf <- tree_wf %>% add_model(tree_spec)- Fit a model

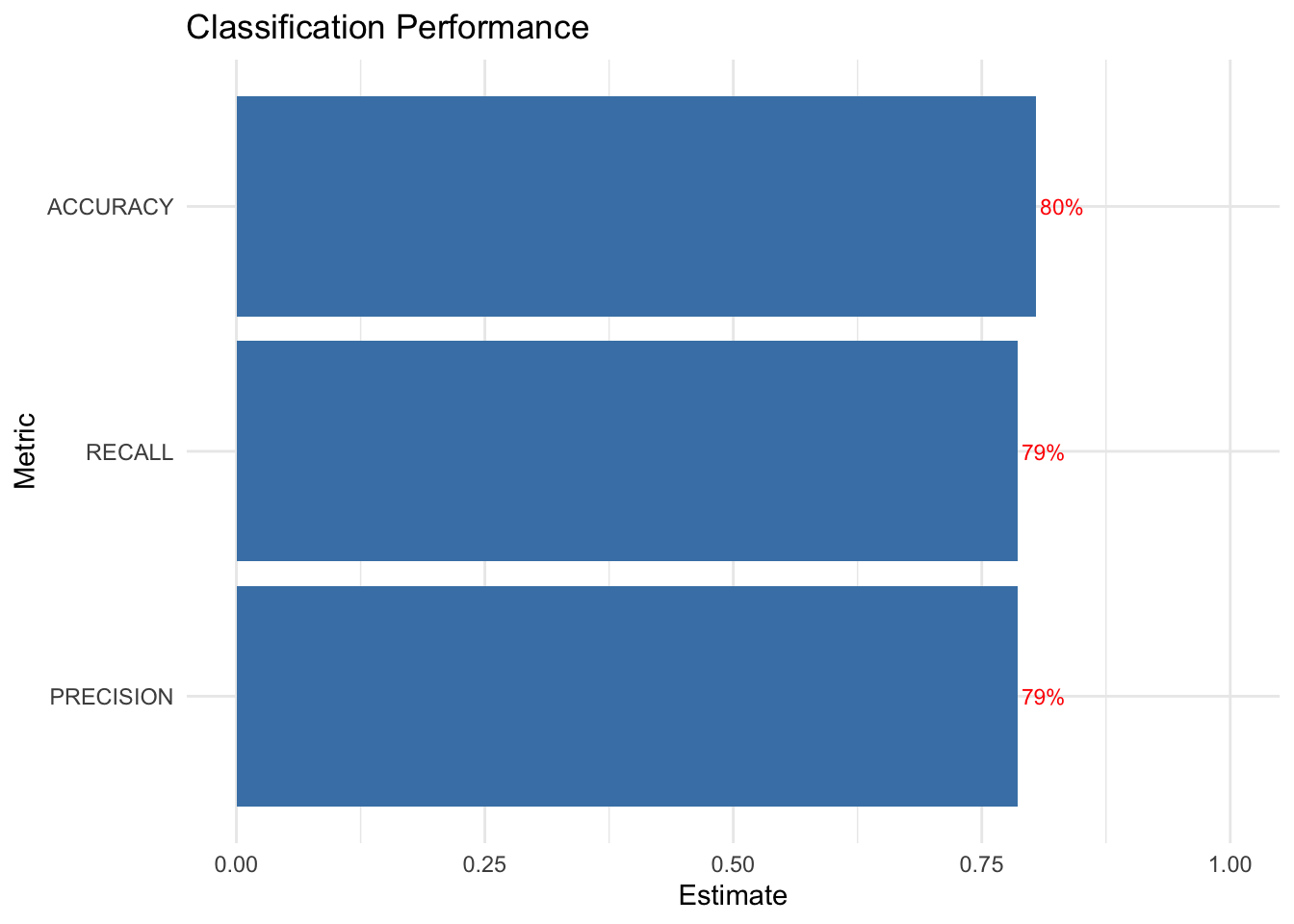

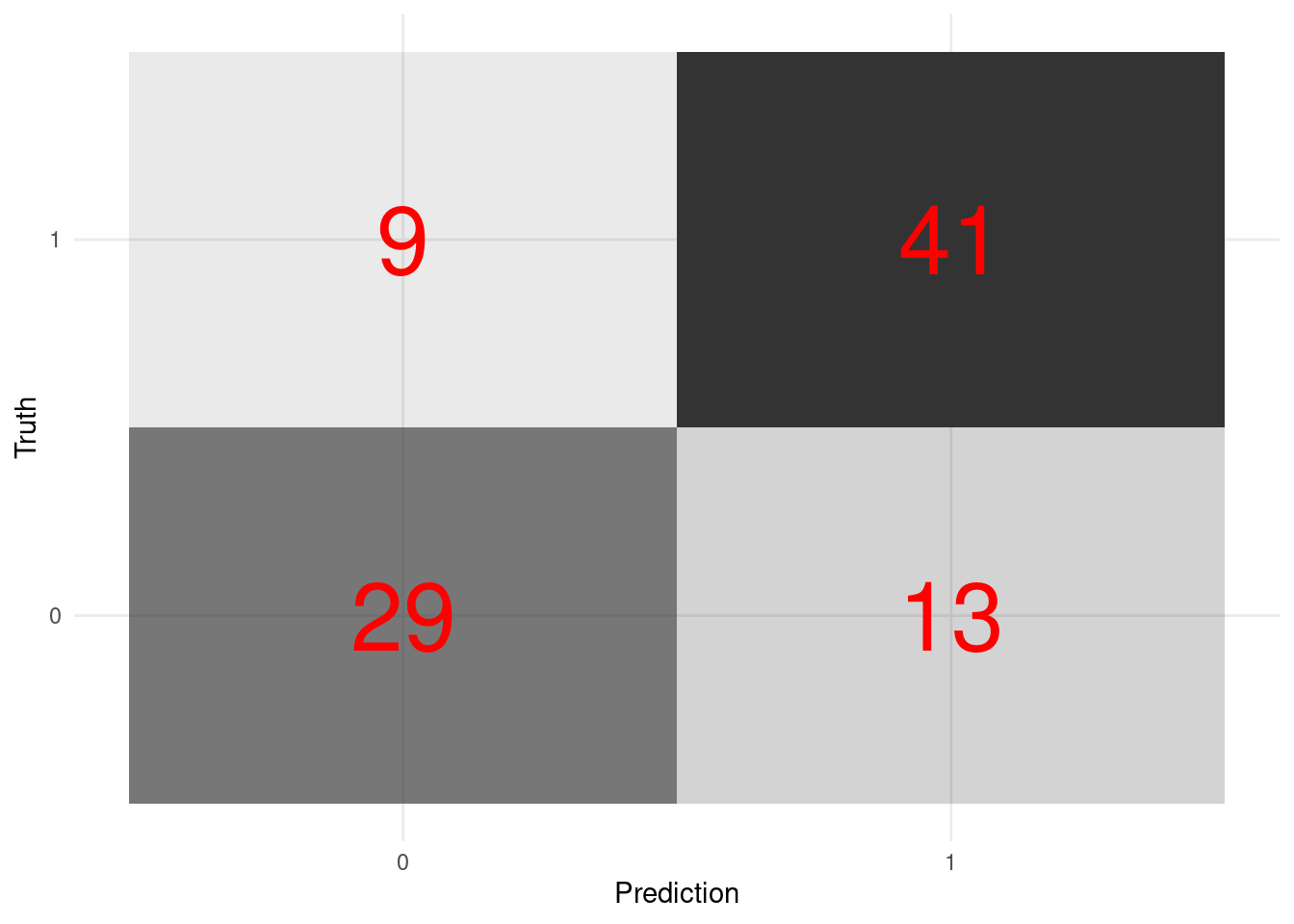

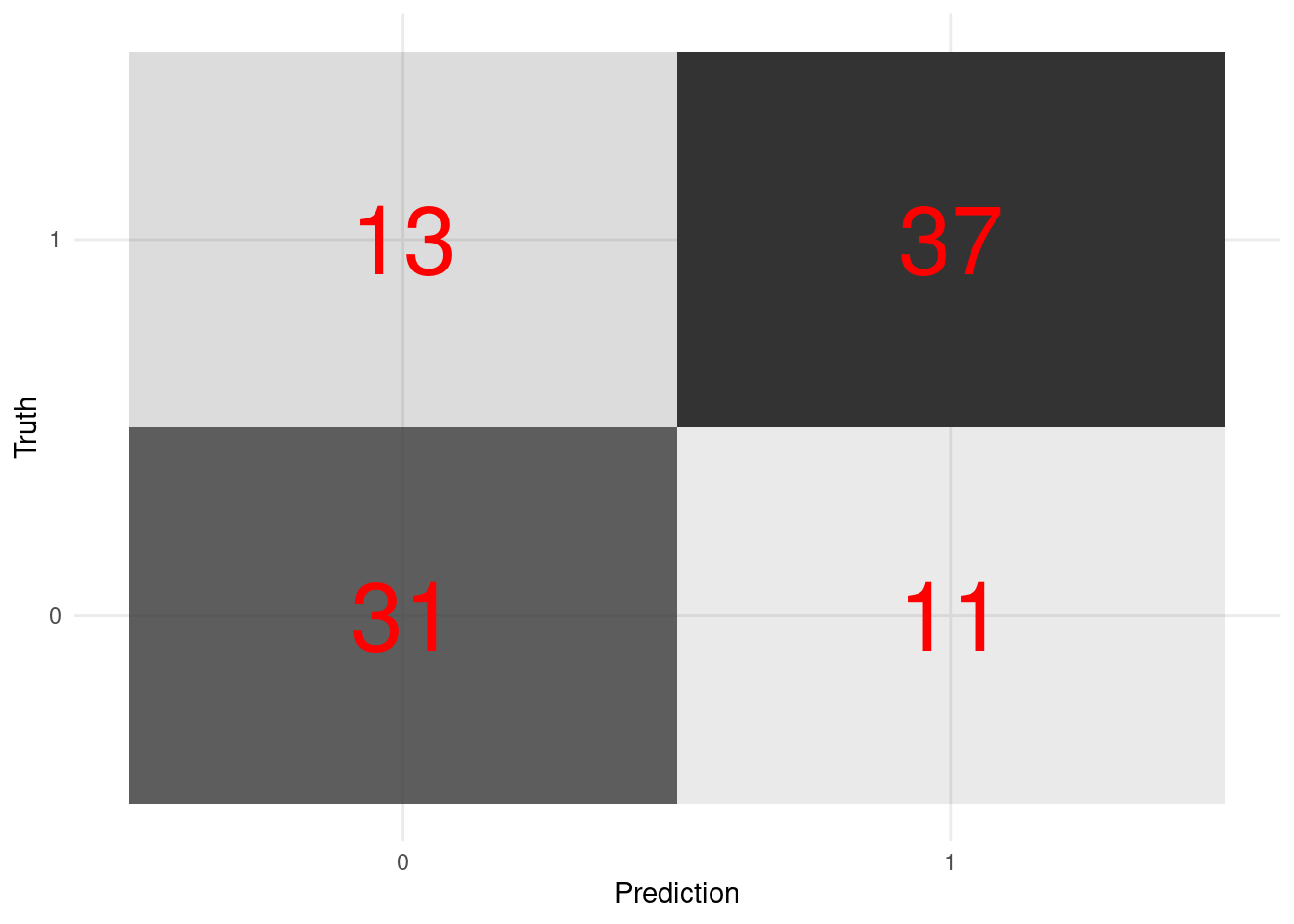

8.6.2.2 yardstick

- Let’s formally test prediction performance.

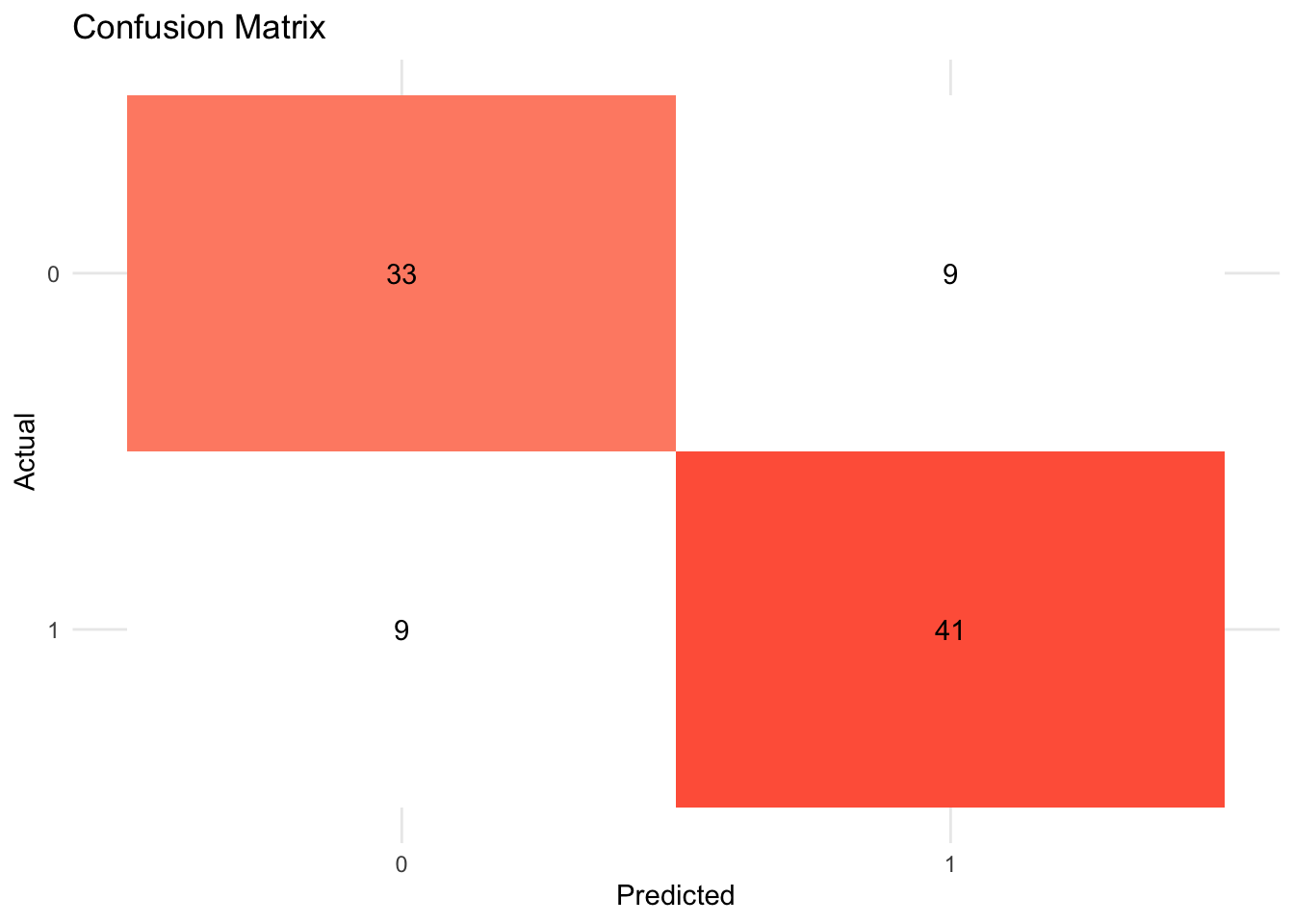

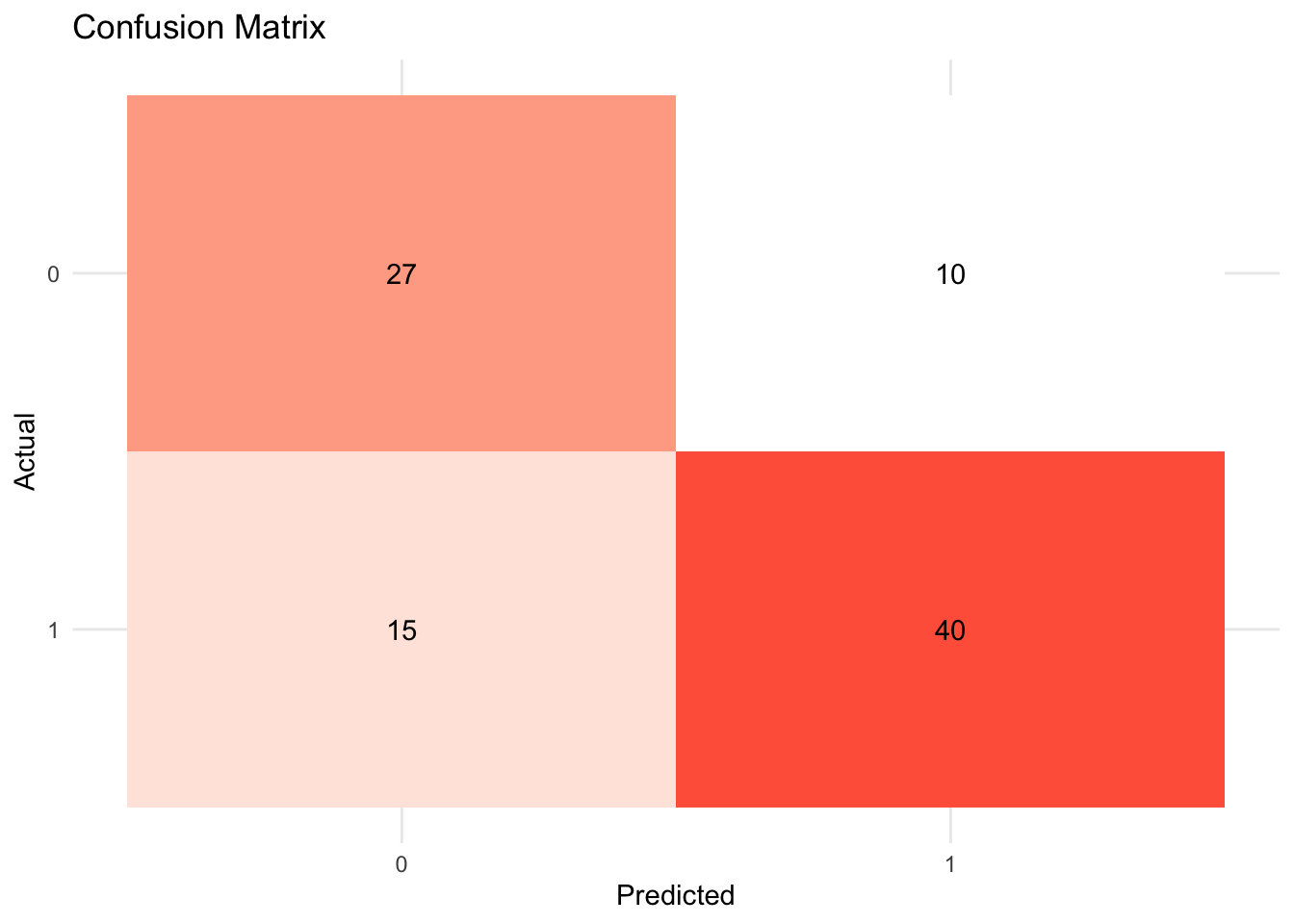

- Confusion matrix

A confusion matrix is often used to describe the performance of a classification model. The below example is based on a binary classification model.

| Predicted: YES | Predicted: NO | |

|---|---|---|

| Actual: YES | True positive (TP) | False negative (FN) |

| Actual: NO | False positive (FP) | True negative (TN) |

- Metrics

accuracy: The proportion of the data predicted correctly (\(\frac{TP + TN}{total}\)). 1 - accuracy = misclassification rate.precision: Positive predictive value. When the model predicts yes, how correct is it? (\(\frac{TP}{TP + FP}\))recall(sensitivity): True positive rate (e.g., healthy people healthy). When the actual value is yes, how often does the model predict yes? (\(\frac{TP}{TP + FN}\))F-score: A weighted average between precision and recall.ROC Curve(receiver operating characteristic curve): a plot that shows the relationship between true and false positive rates at different classification thresholds. y-axis indicates the true positive rate and x-axis indicates the false positive rate. What matters is the AUC (Area under the ROC Curve), which is a cumulative probability function of ranking a random “positive” - “negative” pair (for the probability of AUC, see this blog post).

- To learn more about other metrics, check out the yardstick package references.

# Define performance metrics

metrics <- yardstick::metric_set(accuracy, precision, recall)

# Visualize

tree_fit_viz_metr <- visualize_class_eval(tree_fit)

tree_fit_viz_metr

tree_fit_viz_mat <- visualize_class_conf(tree_fit)

tree_fit_viz_mat

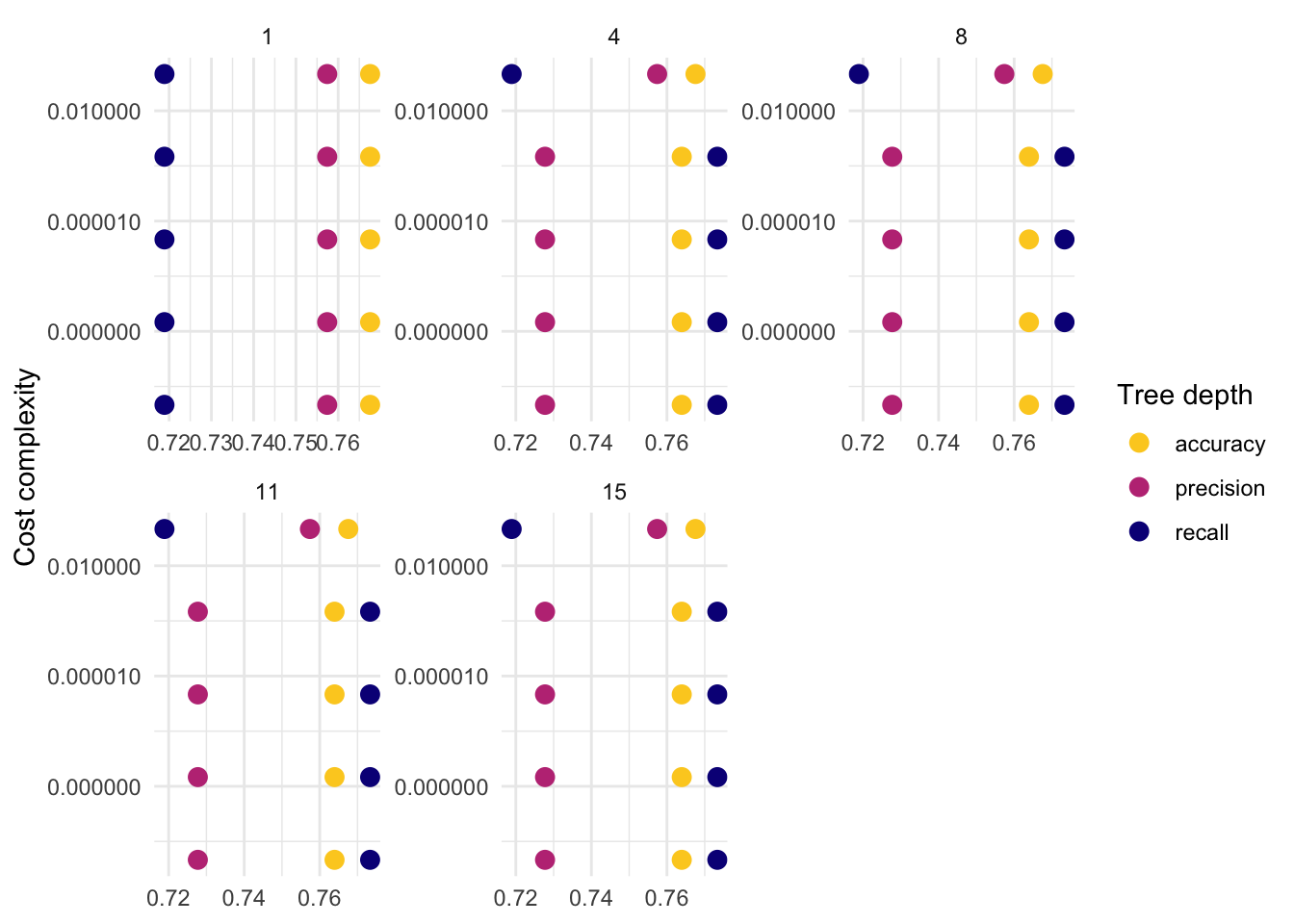

8.6.2.3 tune

8.6.2.3.1 tune ingredients

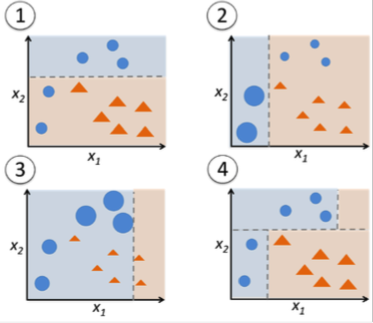

Decisions trees tend to overfit. We need to consider two things to reduce this problem: how to split and when to stop a tree.

complexity parameter: a high CP means a simple decision tree with few splits.

tree_depth

tune_spec <- decision_tree(

cost_complexity = tune(), # how to split

tree_depth = tune(), # when to stop

mode = "classification"

) %>%

set_engine("rpart")

tree_grid <- grid_regular(cost_complexity(),

tree_depth(),

levels = 5

) # 2 hyperparameters -> 5*5 = 25 combinations

# 10-fold cross-validation

set.seed(1234) # for reproducibility

tree_folds <- vfold_cv(train_x_class %>% bind_cols(tibble(target = train_y_class)),

strata = target

)8.6.2.3.2 Add these elements to a workflow

# Update workflow

tree_wf <- tree_wf %>% update_model(tune_spec)

# Determine the number of cores

no_cores <- detectCores() - 1

# Initiate

cl <- makeCluster(no_cores)

registerDoParallel(cl)

# Tuning results

tree_res <- tree_wf %>%

tune_grid(

resamples = tree_folds,

grid = tree_grid,

metrics = metrics

)8.6.2.3.3 Visualize

- The following plot draws on the vignette of the tidymodels package.

tree_res %>%

collect_metrics() %>%

mutate(tree_depth = factor(tree_depth)) %>%

ggplot(aes(cost_complexity, mean, col = .metric)) +

geom_point(size = 3) +

# Subplots

facet_wrap(~tree_depth,

scales = "free",

nrow = 2

) +

# Log scale x

scale_x_log10(labels = scales::label_number()) +

# Discrete color scale

scale_color_viridis_d(option = "plasma", begin = .9, end = 0) +

labs(

x = "Cost complexity",

col = "Tree depth",

y = NULL

) +

coord_flip()

8.6.2.3.4 Select

# Optimal hyperparameter

best_tree <- tune::select_best(tree_res, metric = "recall")

# Add the hyperparameter to the workflow

finalize_tree <- tree_wf %>%

finalize_workflow(best_tree)

tree_fit_tuned <- finalize_tree %>%

fit(train_x_class %>% bind_cols(tibble(target = train_y_class)))

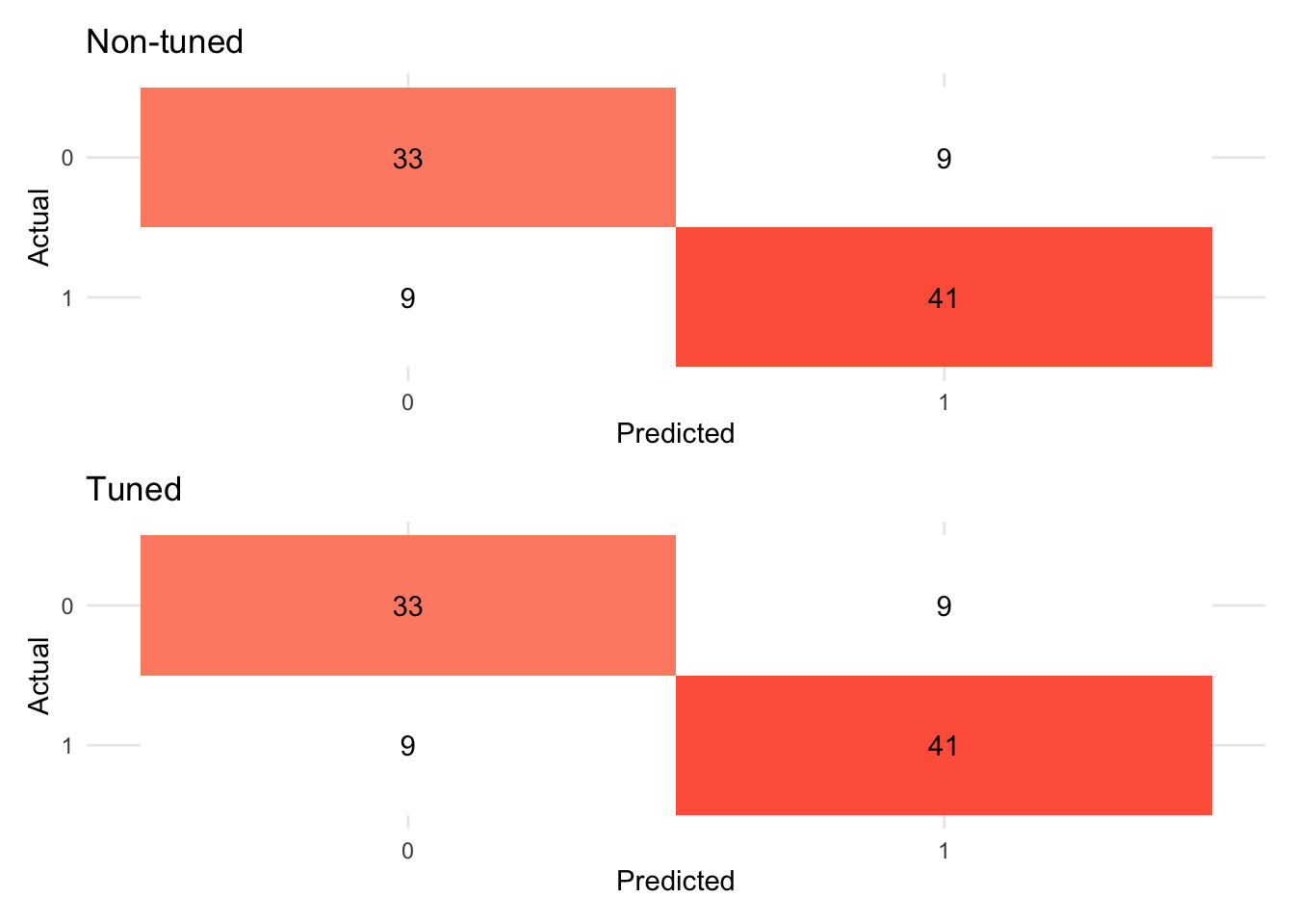

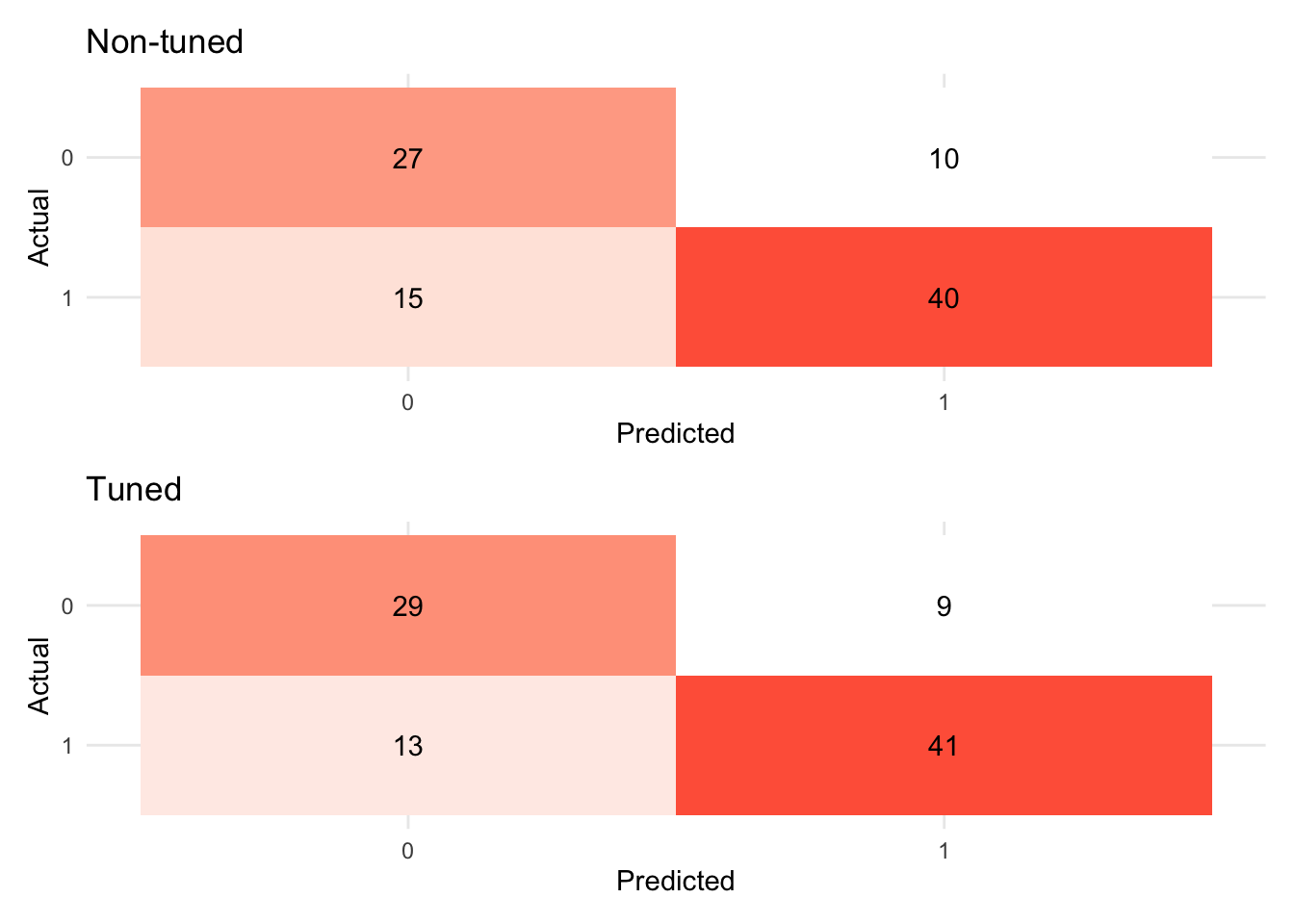

# Metrics

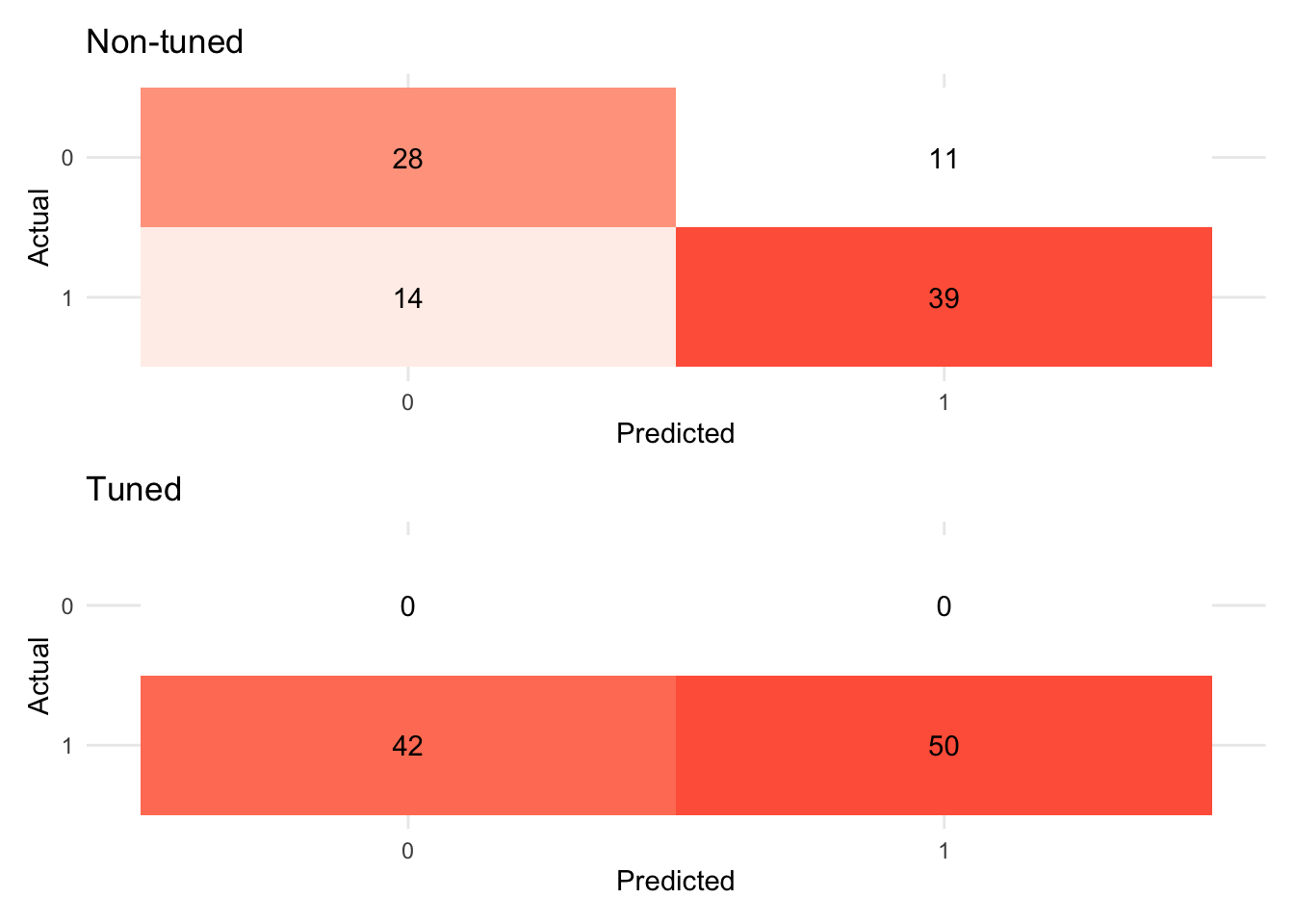

(tree_fit_viz_metr + labs(title = "Non-tuned")) / (visualize_class_eval(tree_fit_tuned) + labs(title = "Tuned"))

# Confusion matrix

(tree_fit_viz_mat + labs(title = "Non-tuned")) / (visualize_class_conf(tree_fit_tuned) + labs(title = "Tuned"))

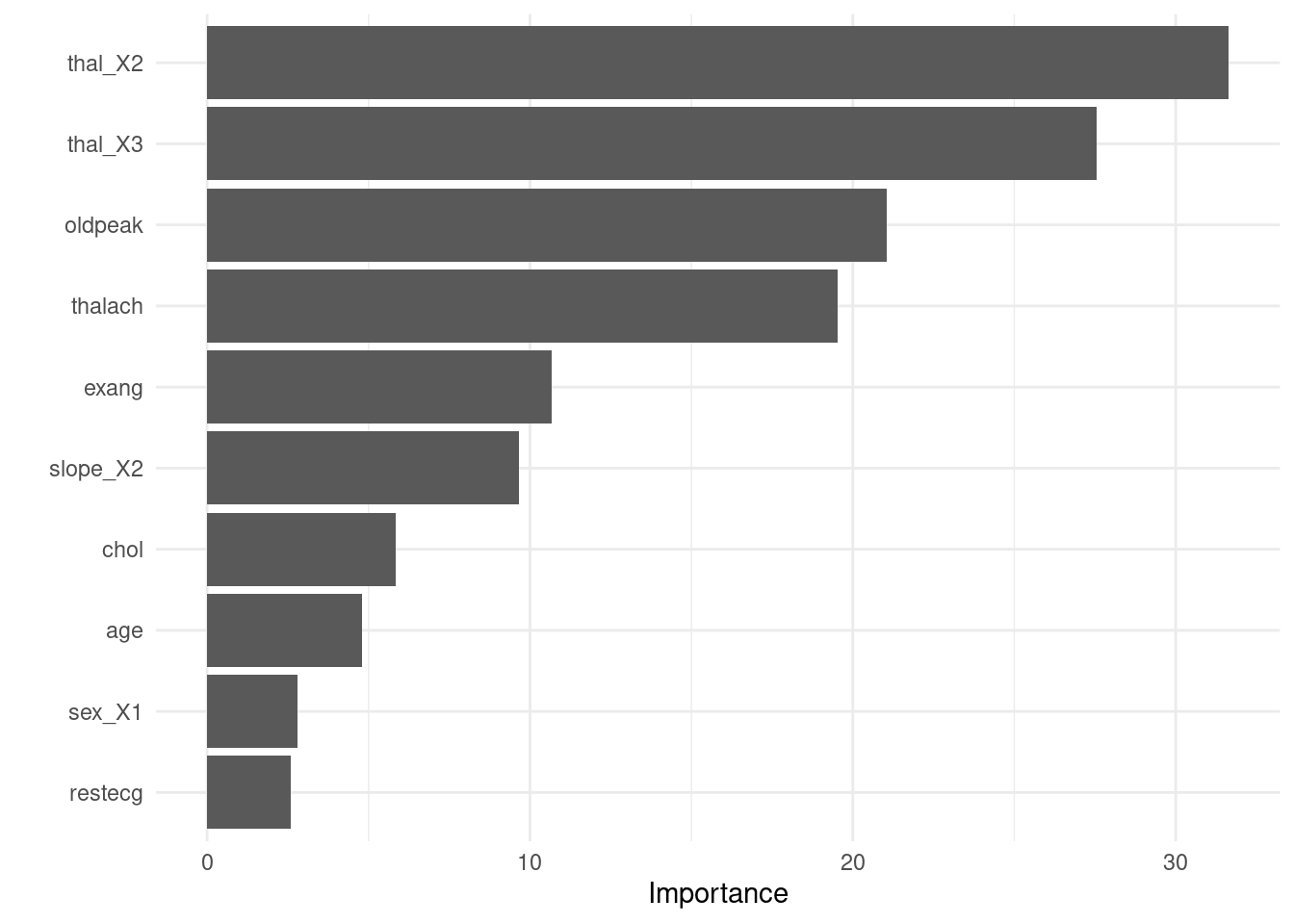

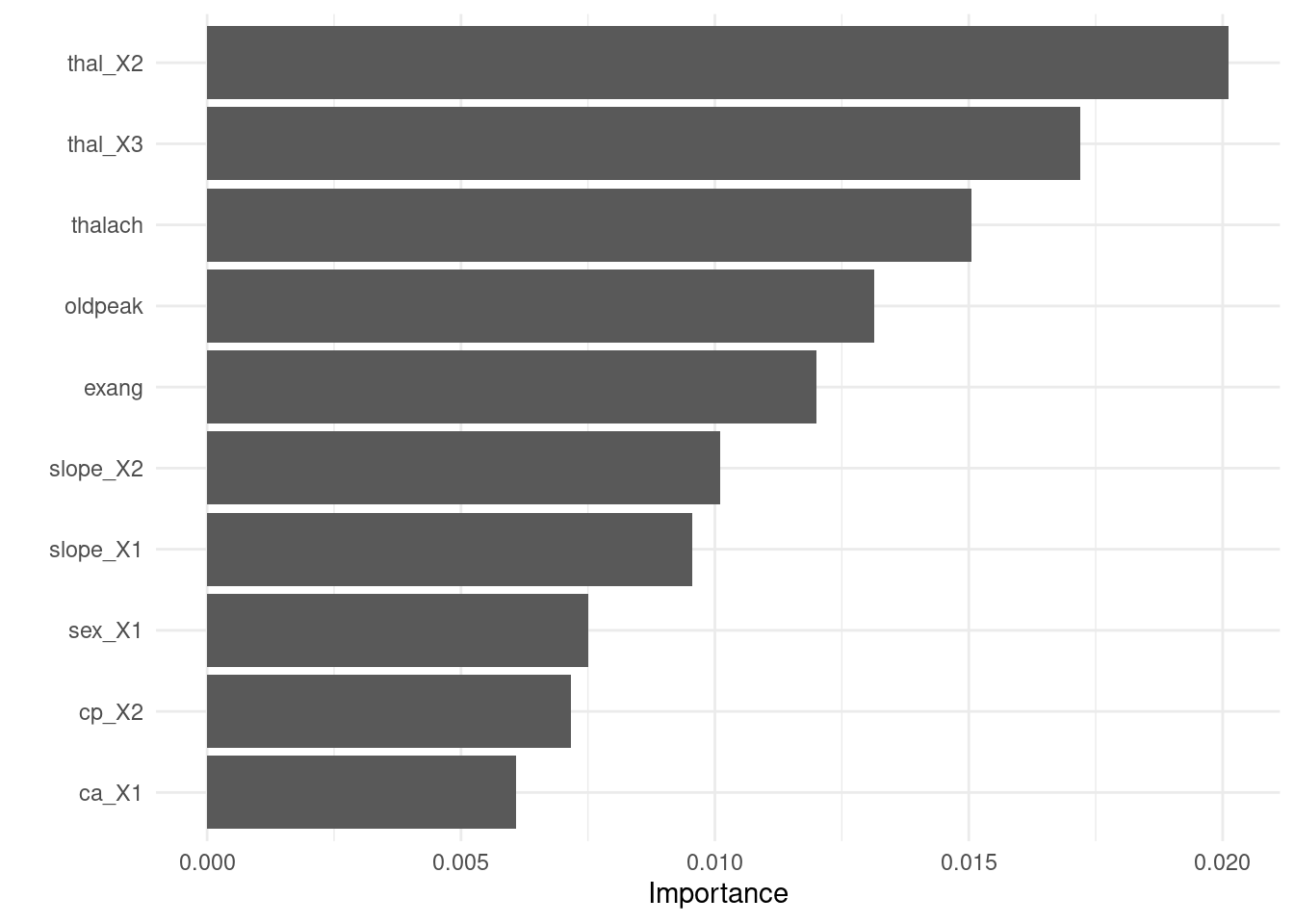

- Visualize variable importance

8.6.2.3.5 Test fit

- Apply the tuned model to the test dataset

test_fit <- finalize_tree %>%

fit(test_x_class %>% bind_cols(tibble(target = test_y_class)))

evaluate_class(test_fit)## # A tibble: 3 × 3

## .metric .estimator .estimate

## <chr> <chr> <dbl>

## 1 accuracy binary 0.761

## 2 precision binary 0.778

## 3 recall binary 0.667In the next subsection, we will learn variants of ensemble models that improve decision tree models by putting models together.

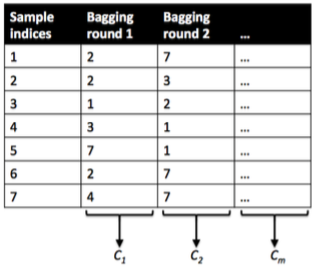

8.6.3 Bagging (Random forest)

Key idea applied across all ensemble models (bagging, boosting, and stacking): single learner -> N learners (N > 1)

Many learners could perform better than a single learner as this approach reduces the variance of a single estimate and provides more stability.

Here we focus on the difference between bagging and boosting. In short, boosting may reduce bias while increasing variance. On the other hand, bagging may reduce variance but has nothing to do with bias. Please check out What is the difference between Bagging and Boosting? by aporras.

bagging

Data: Training data will be randomly sampled with replacement (bootstrapping samples + drawing random subsets of features for training individual trees)

Learning: Building models in parallel (independently)

Prediction: Simple average of the estimated responses (majority vote system)

boosting

Data: Weighted training data will be random sampled

Learning: Building models sequentially (mispredicted cases would receive more weights)

Prediction: Weighted average of the estimated responses

8.6.3.1 parsnip

- Build a model

- Specify a model

- Specify an engine

- Specify a mode

# workflow

rand_wf <- workflow() %>% add_formula(target ~ .)

# spec

rand_spec <- rand_forest(

# Mode

mode = "classification",

# Tuning hyperparameters

mtry = NULL, # The number of predictors to available for splitting at each node

min_n = NULL, # The minimum number of data points needed to keep splitting nodes

trees = 500

) %>% # The number of trees

set_engine("ranger",

# We want the importance of predictors to be assessed.

seed = 1234,

importance = "permutation"

)

rand_wf <- rand_wf %>% add_model(rand_spec)- Fit a model

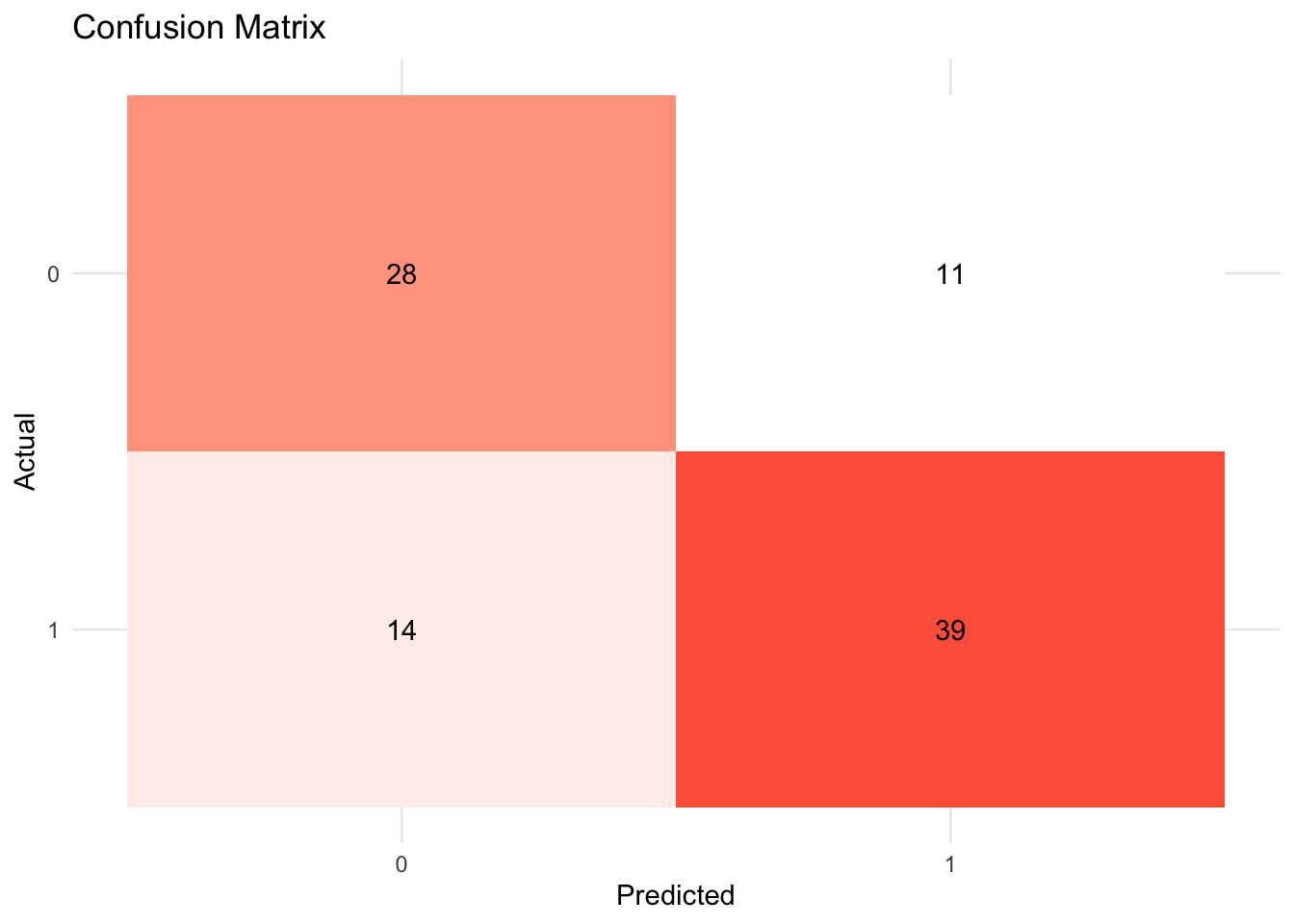

8.6.3.2 yardstick

# Define performance metrics

metrics <- yardstick::metric_set(accuracy, precision, recall)

rand_fit_viz_metr <- visualize_class_eval(rand_fit)

rand_fit_viz_metr

- Visualize the confusion matrix.

rand_fit_viz_mat <- visualize_class_conf(rand_fit)

rand_fit_viz_mat

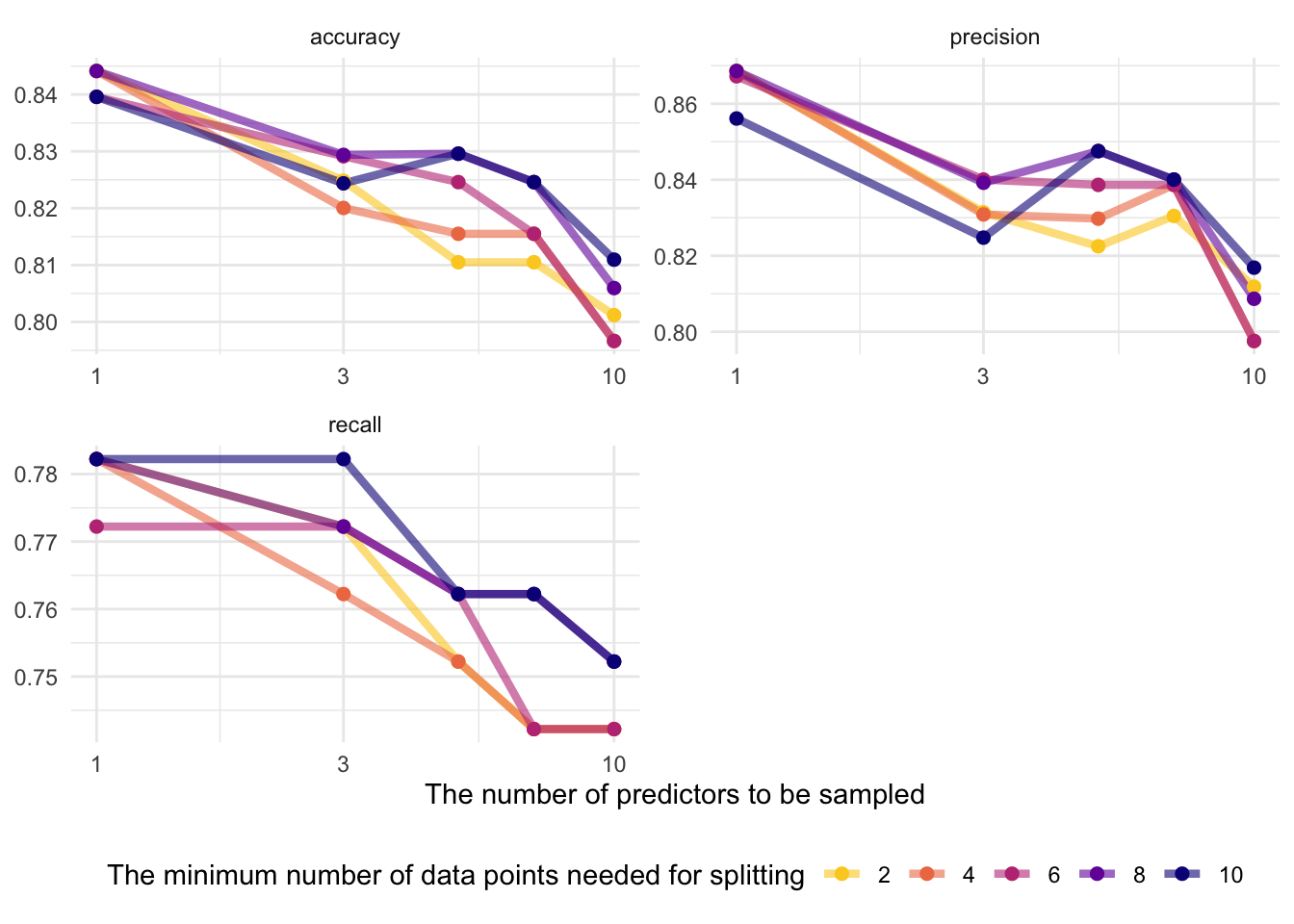

8.6.3.3 tune

8.6.3.3.1 tune ingredients

We focus on the following two hyperparameters:

mtry: The number of predictors available for splitting at each node.min_n: The minimum number of data points needed to keep splitting nodes.

8.6.3.3.3 Visualize

rand_res %>%

collect_metrics() %>%

mutate(min_n = factor(min_n)) %>%

ggplot(aes(mtry, mean, color = min_n)) +

# Line + Point plot

geom_line(size = 1.5, alpha = 0.6) +

geom_point(size = 2) +

# Subplots

facet_wrap(~.metric,

scales = "free",

nrow = 2

) +

# Log scale x

scale_x_log10(labels = scales::label_number()) +

# Discrete color scale

scale_color_viridis_d(option = "plasma", begin = .9, end = 0) +

labs(

x = "The number of predictors to be sampled",

col = "The minimum number of data points needed for splitting",

y = NULL

) +

theme(legend.position = "bottom")

# Optimal hyperparameter

best_tree <- tune::select_best(rand_res, metric = "accuracy")

# Add the hyperparameter to the workflow

finalize_tree <- rand_wf %>%

finalize_workflow(best_tree)

rand_fit_tuned <- finalize_tree %>%

fit(train_x_class %>% bind_cols(tibble(target = train_y_class)))

# Metrics

(rand_fit_viz_metr + labs(title = "Non-tuned")) / (visualize_class_eval(rand_fit_tuned) + labs(title = "Tuned"))

# Confusion matrix

(rand_fit_viz_mat + labs(title = "Non-tuned")) / (visualize_class_conf(rand_fit_tuned) + labs(title = "Tuned"))

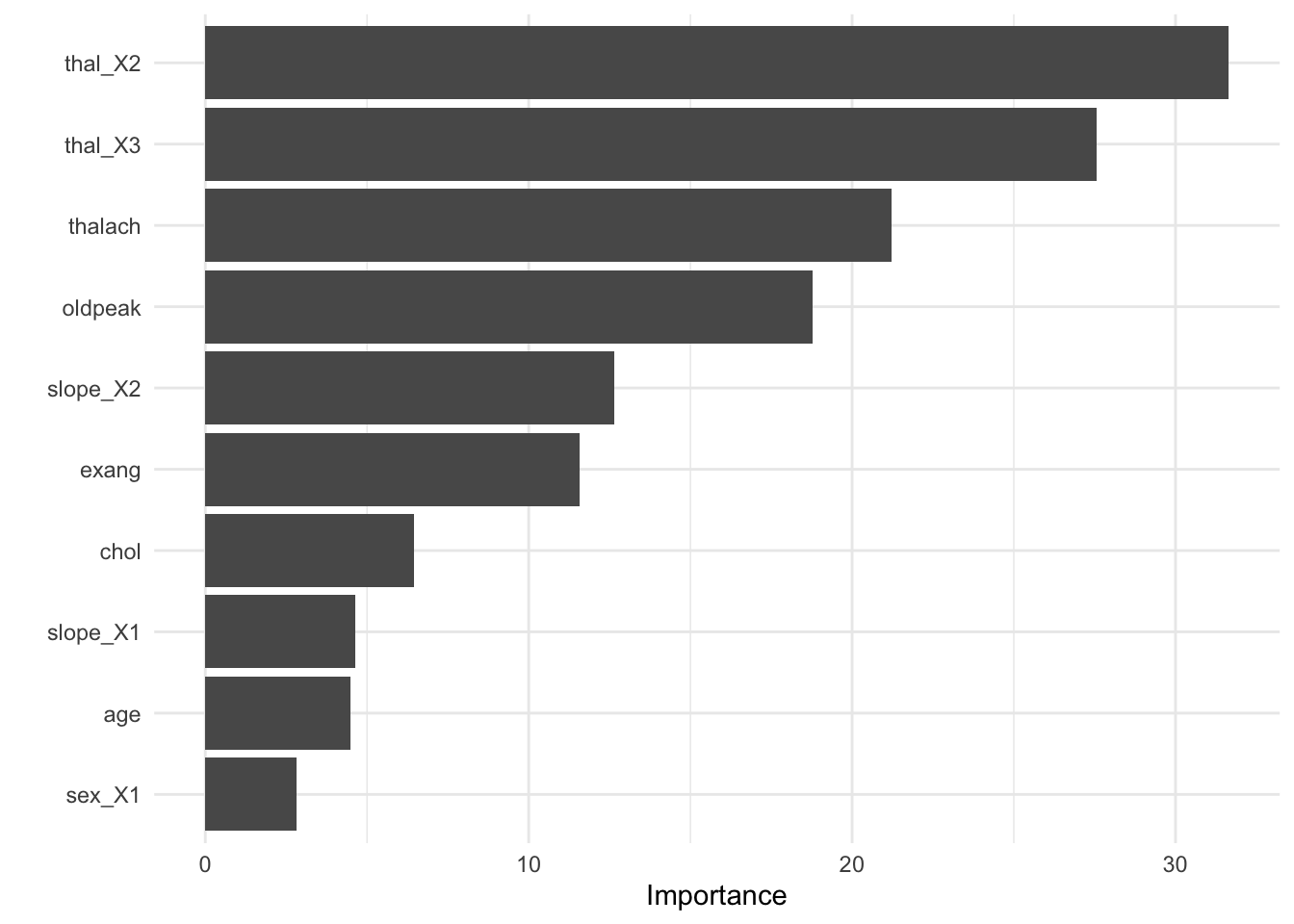

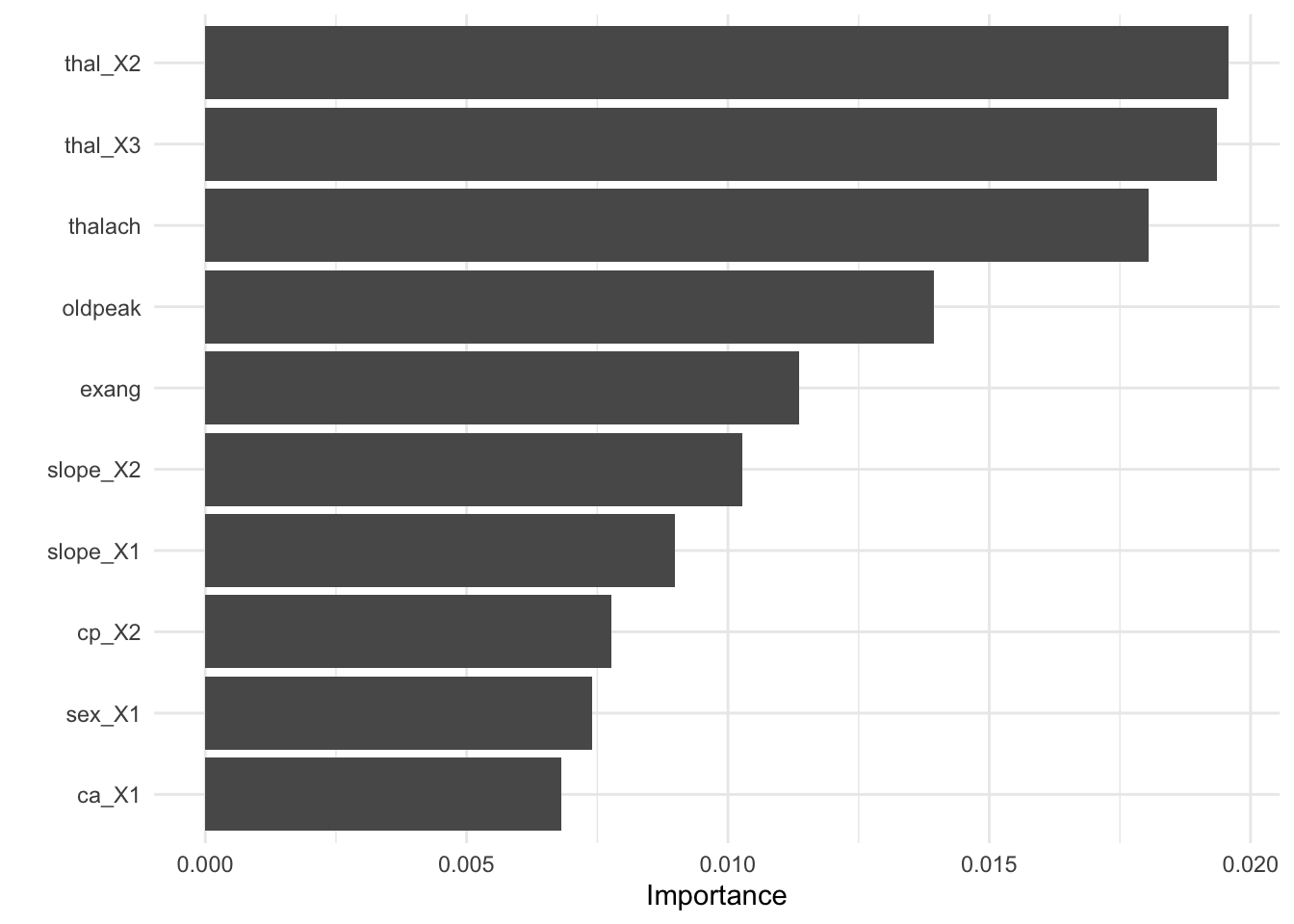

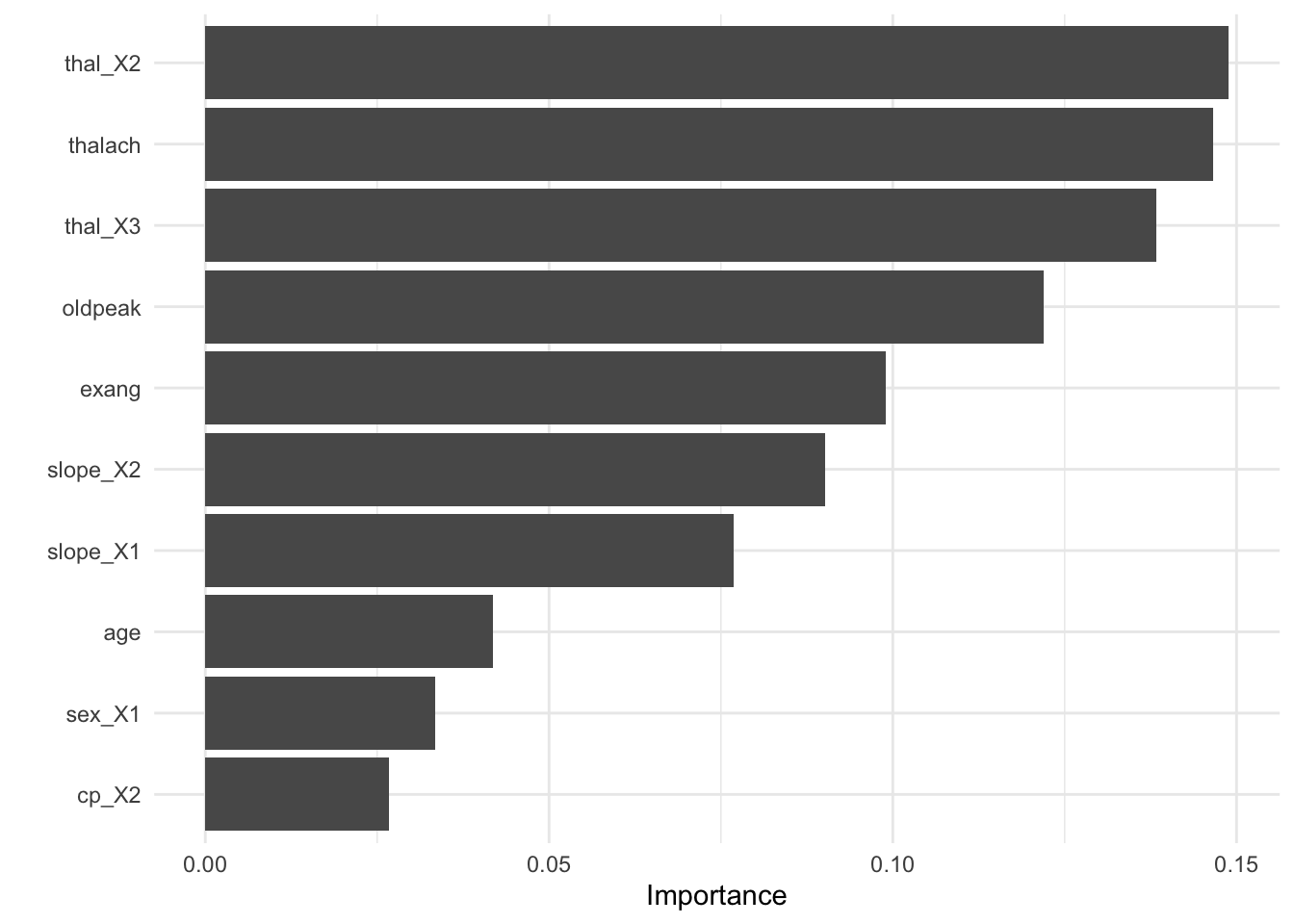

- Visualize variable importance

8.6.3.3.4 Test fit

- Apply the tuned model to the test dataset

test_fit <- finalize_tree %>%

fit(test_x_class %>%

bind_cols(tibble(target = test_y_class)))

evaluate_class(test_fit)## # A tibble: 3 × 3

## .metric .estimator .estimate

## <chr> <chr> <dbl>

## 1 accuracy binary 0.946

## 2 precision binary 0.930

## 3 recall binary 0.9528.6.4 Boosting (XGboost)

8.6.4.1 parsnip: Model specification and tuning

-

Build and tune a model

- Define a workflow with your formula

- Add a tunable model spec (with

tune()placeholders)

- Set the engine and mode

- Create resamples (e.g. stratified 5‑fold CV)

- Design a hyperparameter grid

- Tune across the grid

- Define a workflow with your formula

# 1) Define the workflow

xg_wf <- workflow() %>%

add_formula(target ~ .) %>%

add_model(

boost_tree(

trees = tune(), # to be tuned

tree_depth = tune(),

learn_rate = tune()

) %>%

set_engine("xgboost") %>%

set_mode("classification")

)

# 2) Create resamples (5-fold CV, stratified by target)

set.seed(123)

cv_splits <- vfold_cv(

data %>% mutate(target = as.factor(target)),

v = 5,

strata = target

)

# 3) Specify a tuning grid

xg_grid <- grid_latin_hypercube(

trees(range = c(50, 500)),

tree_depth(range = c(1, 10)),

learn_rate(range = c(0.01, 0.3)),

size = 8

)

# 4) Run tuning

xg_res <- tune_race_anova(

xg_wf, resamples = cv_splits, grid = xg_grid,

metrics = metric_set(accuracy, recall),

control = control_race(verbose = FALSE, parallel_over = "resamples")

)-

Fit the final model

- Select the best parameters

- Finalize the workflow

- Fit to the training set

# Select best metrics (name the metric argument explicitly)

best_params <- select_best(xg_res, metric = "accuracy")

# Finalize the workflow with those parameters

xg_wf_final <- finalize_workflow(xg_wf, best_params)

# Fit the finalized workflow

xg_fit <- fit(

xg_wf_final,

train_x_class %>%

bind_cols(tibble(target = train_y_class))

)8.6.4.2 yardstick

metrics <- metric_set(

yardstick::accuracy,

yardstick::precision,

yardstick::recall

)

evaluate_class(xg_fit)## # A tibble: 3 × 3

## .metric .estimator .estimate

## <chr> <chr> <dbl>

## 1 accuracy binary 0.728

## 2 precision binary 0.718

## 3 recall binary 0.667

xg_fit_viz_metr <-

visualize_class_eval(xg_fit)

xg_fit_viz_metr

- Visualize the confusion matrix.

xg_fit_viz_mat <-

visualize_class_conf(xg_fit)

xg_fit_viz_mat

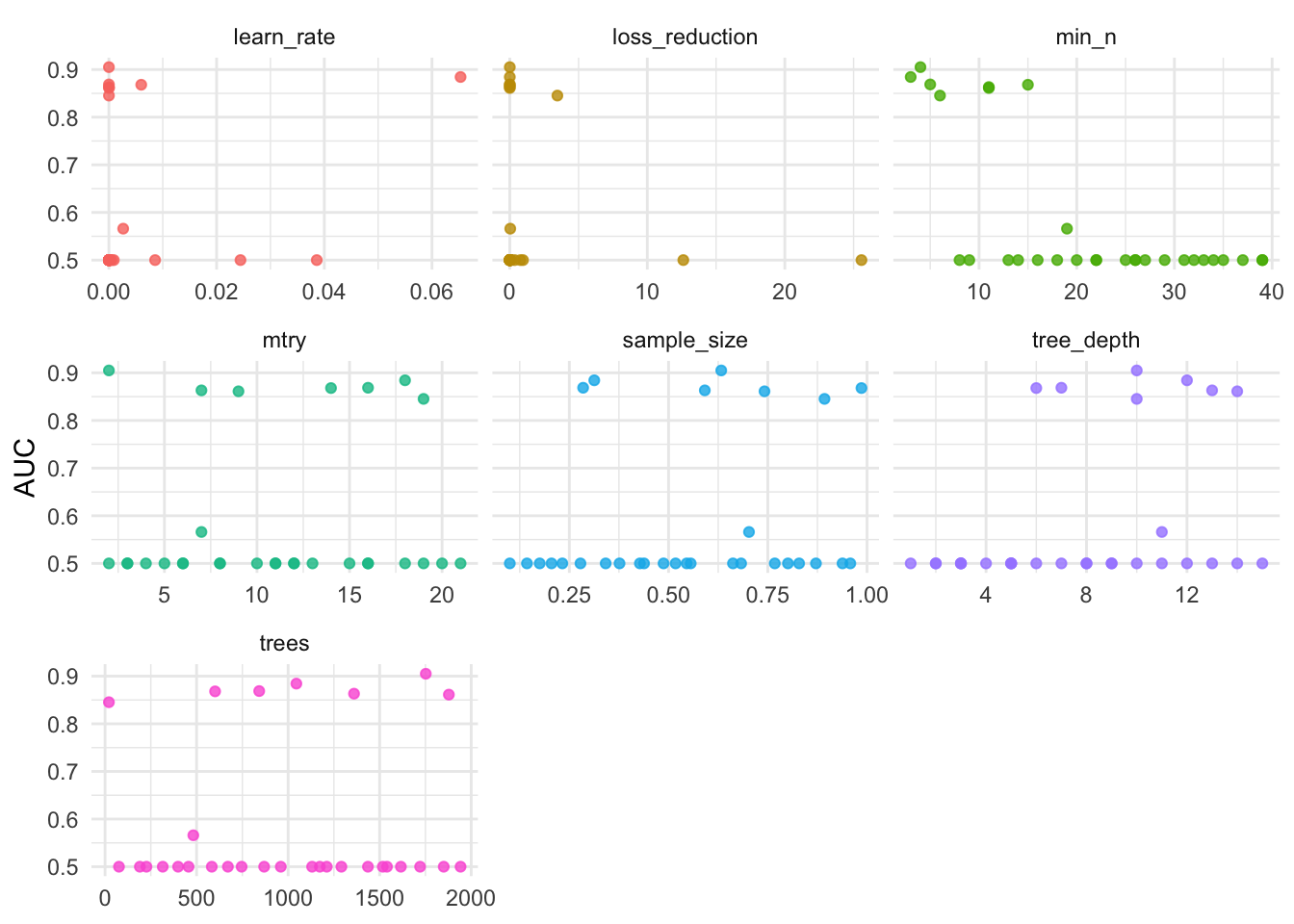

8.6.4.3 tune

8.6.4.3.1 tune ingredients

- We focus on the following hyperparameters:

trees,tree_depth,learn_rate,min_n,mtry,loss_reduction,andsample_size

tune_spec <-

xg_spec <- boost_tree(

# Mode

mode = "classification",

# Tuning hyperparameters

# The number of trees to fit, aka boosting iterations

trees = tune(),

# The depth of the decision tree (how many levels of splits).

tree_depth = tune(),

# Learning rate: lower means the ensemble will adapt more slowly.

learn_rate = tune(),

# Stop splitting a tree if we only have this many obs in a tree node.

min_n = tune(),

loss_reduction = tune(),

# The number of randomly selected hyperparameters

mtry = tune(),

# The size of the data set used for modeling within an iteration

sample_size = tune()

) %>%

set_engine("xgboost")

# Space-filling hyperparameter grids

xg_grid <- grid_latin_hypercube(

trees(),

tree_depth(),

learn_rate(),

min_n(),

loss_reduction(),

sample_size = sample_prop(),

finalize(mtry(), train_x_class),

size = 30

)

# 10-fold cross-validation

set.seed(1234) # for reproducibility

xg_folds <- vfold_cv(train_x_class %>% bind_cols(tibble(target = train_y_class)),

strata = target

)8.6.4.3.3 Visualize

conflict_prefer("filter", "dplyr")

xg_res %>%

collect_metrics() %>%

filter(.metric == "roc_auc") %>%

pivot_longer(mtry:sample_size,

values_to = "value",

names_to = "parameter"

) %>%

ggplot(aes(x = value, y = mean, color = parameter)) +

geom_point(alpha = 0.8, show.legend = FALSE) +

facet_wrap(~parameter, scales = "free_x") +

labs(

y = "AUC",

x = NULL

)

# Optimal hyperparameter

best_xg <- select_best(xg_res, metric = "roc_auc")

# Add the hyperparameter to the workflow

finalize_xg <- xg_wf %>%

finalize_workflow(best_xg)

xg_fit_tuned <- finalize_xg %>%

fit(train_x_class %>% bind_cols(tibble(target = train_y_class)))

# Metrics

(xg_fit_viz_metr + labs(title = "Non-tuned")) / (visualize_class_eval(xg_fit_tuned) + labs(title = "Tuned"))

# Confusion matrix

(xg_fit_viz_mat + labs(title = "Non-tuned")) / (visualize_class_conf(xg_fit_tuned) + labs(title = "Tuned"))

- Visualize variable importance

8.6.4.3.4 Test fit

- Apply the tuned model to the test dataset

test_fit <- finalize_xg %>%

fit(test_x_class %>% bind_cols(tibble(target = test_y_class)))

evaluate_class(test_fit)## # A tibble: 3 × 3

## .metric .estimator .estimate

## <chr> <chr> <dbl>

## 1 accuracy binary 0.543

## 2 precision binary NA

## 3 recall binary 08.6.5 Stacking (SuperLearner)

This stacking part of the book heavily relies on Chris Kennedy’s notebook.

8.6.5.1 Overview

8.6.5.1.1 Stacking

Wolpert, D.H., 1992. Stacked generalization. Neural networks, 5(2), pp.241-259.

Breiman, L., 1996. Stacked regressions. Machine learning, 24(1), pp.49-64.

8.6.5.1.2 SuperLearner

The “SuperLearner” R package is a method that simplifies ensemble learning by allowing you to simultaneously evaluate the cross-validated performance of multiple algorithms and/or a single algorithm with differently tuned hyperparameters. This is a generally advisable approach to machine learning instead of fitting single algorithms.

Let’s see how the four classification algorithms you learned in this workshop (1-lasso, 2-decision tree, 3-random forest, and 4-gradient boosted trees) compare to each other and also to 5-binary logistic regression (glm) and the 6-mean of Y as a benchmark algorithm, in terms of their cross-validated error!

A “wrapper” is a short function that adapts an algorithm for the SuperLearner package. Check out the different algorithm wrappers offered by SuperLearner:

8.6.5.2 Choose algorithms

# Review available models

SuperLearner::listWrappers()

# Compile the algorithm wrappers to be used.

sl_lib <- c(

"SL.mean", # Marginal mean of the outcome ()

"SL.glmnet", # GLM with lasso/elasticnet regularization

"SL.rpart", # Decision tree

"SL.ranger", # Random forest

"SL.xgboost"

) # Xgbboost8.6.5.3 Fit model

Fit the ensemble!

# This is a seed that is compatible with multicore parallel processing.

# See ?set.seed for more information.

set.seed(1, "L'Ecuyer-CMRG")

# This will take a few minutes to execute - take a look at the .html file to see the output!

cv_sl <- SuperLearner::CV.SuperLearner(

Y = as.numeric(as.character(train_y_class)),

X = train_x_class,

family = binomial(),

# For a real analysis we would use V = 10.

cvControl = list(V = 5L, stratifyCV = TRUE),

SL.library = sl_lib,

verbose = FALSE

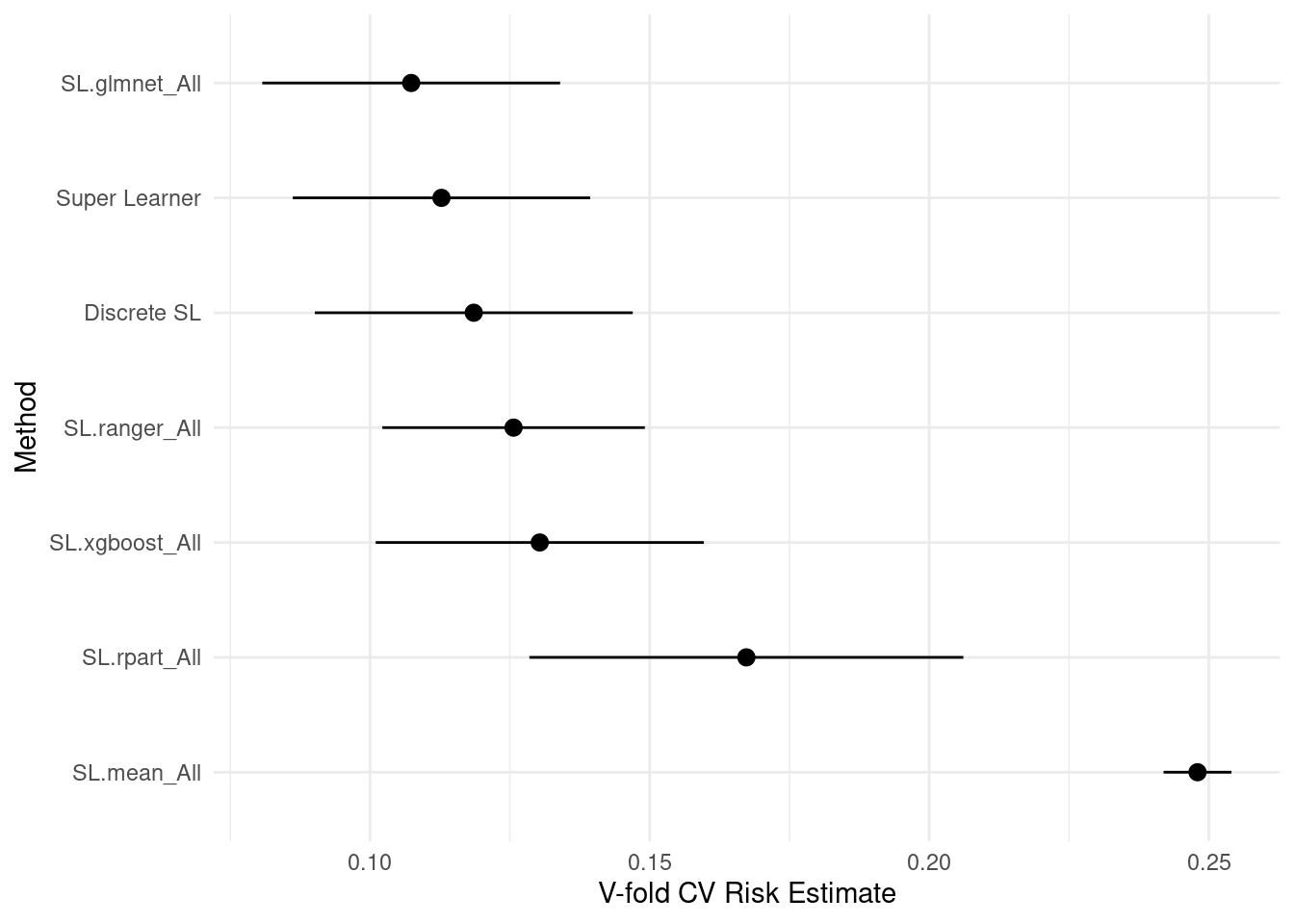

)8.6.5.4 Risk

Risk is the average loss, and loss is how far off the prediction was for an individual observation. The lower the risk, the fewer errors the model makes in its prediction. SuperLearner’s default loss metric is squared error \((y_{actual} - y_{predicted})^2\), so the risk is the mean-squared error (just like in ordinary least squares regression). View the summary, plot results, and compute the Area Under the ROC Curve (AUC)!

8.6.5.4.1 Summary

-

Discrete SLchooses the best single learner (in this case,SL.glmnetorlasso). -

SuperLearnertakes a weighted average of the models using the coefficients (importance of each learner in the overall ensemble). Coefficient 0 means that learner is not used at all. -

SL.mean_All(the weighted mean of \(Y\)) is a benchmark algorithm (ignoring features).

summary(cv_sl)8.6.5.5 Compute AUC for all estimators

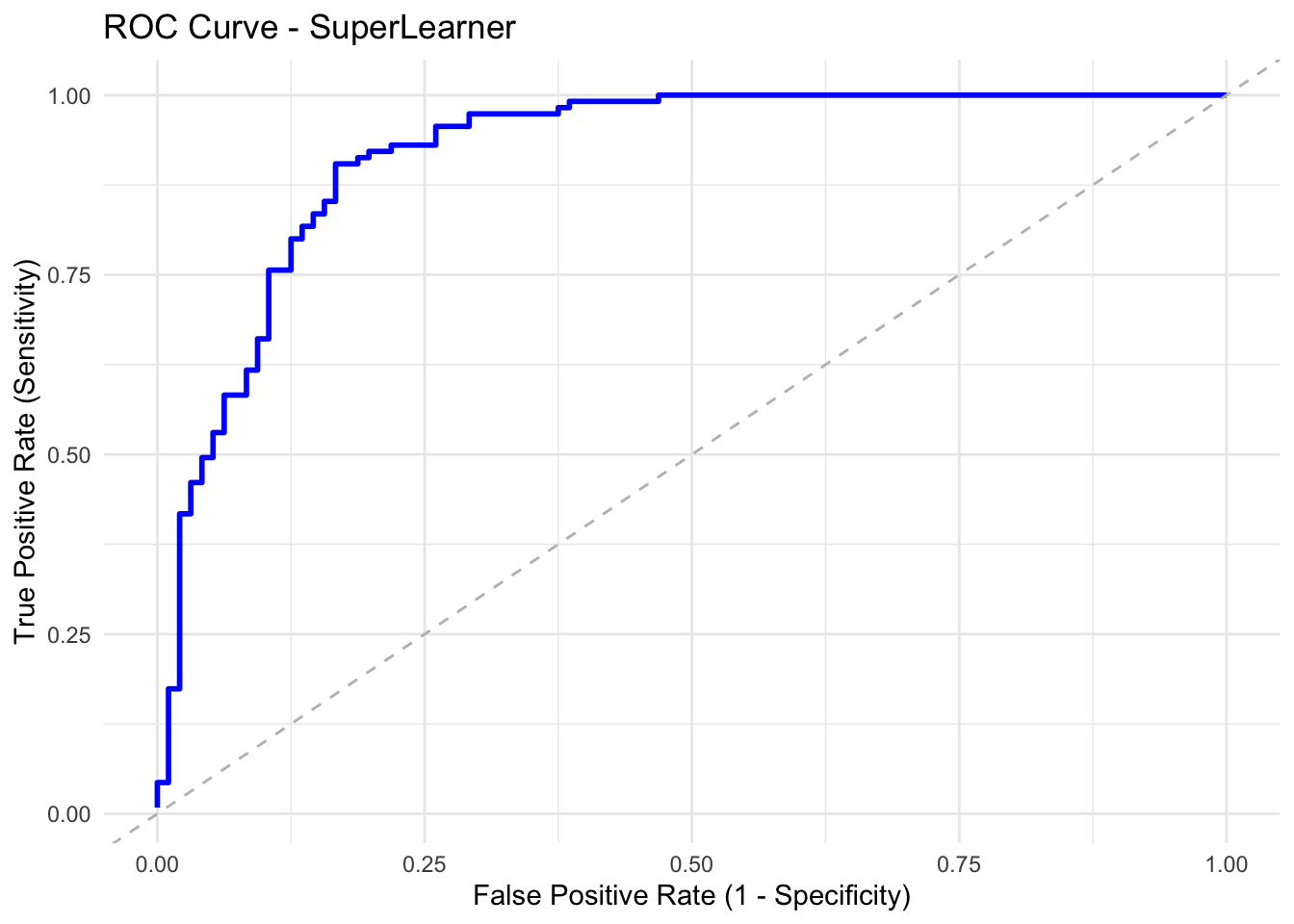

ROC

ROC: a ROC (receiver operating characteristic curve) plots the relationship between True Positive Rate (Y-axis) and FALSE Positive Rate (X-axis).

AUC

AUC: Area Under the ROC Curve

1 = perfect

0.5 = no better than chance

# Summary of cross-validated SuperLearner

# Shows risk estimates and coefficients for each learner

summary(cv_sl)8.6.5.5.1 Plot the ROC curve for the best estimator (Discrete SL)

# Plot ROC curve for SuperLearner

pred <- cv_sl$SL.predict

truth <- cv_sl$Y

# Calculate ROC points

roc_data <- data.frame(pred = pred, truth = truth) %>%

arrange(desc(pred)) %>%

mutate(

tpr = cumsum(truth) / sum(truth),

fpr = cumsum(!truth) / sum(!truth)

)

ggplot(roc_data, aes(x = fpr, y = tpr)) +

geom_line(color = "blue", linewidth = 1) +

geom_abline(slope = 1, intercept = 0, linetype = "dashed", color = "gray") +

labs(

title = "ROC Curve - SuperLearner",

x = "False Positive Rate (1 - Specificity)",

y = "True Positive Rate (Sensitivity)"

) +

theme_minimal()

8.6.5.5.2 Review weight distribution for the SuperLearner

# Display SuperLearner weights

# The coef vector shows the weight assigned to each learner in the ensemble

coef_vec <- as.numeric(cv_sl$coef)

sl_weights <- data.frame(

Algorithm = cv_sl$libraryNames,

Weight = round(coef_vec, 4)

)

sl_weights <- sl_weights[order(sl_weights$Weight, decreasing = TRUE), ]

print(sl_weights, row.names = FALSE)The general stacking approach is available in the tidymodels framework through stacks package (developmental stage).

However, SuperLearner is currently not available in the tidymodels framework. You can easily build and add a parsnip model if you’d like to. If you are interested in knowing more about it, please look at this vignette of the tidymodels.

8.7 Unsupervised learning

x -> f - > y (not defined)

8.7.1 Dimension reduction

8.7.1.1 Correlation analysis

This dataset is a good problem for PCA as some features are highly correlated.

Again, think about what the dataset is about. The following data dictionary comes from this site.

- age - age in years

- sex - sex (1 = male; 0 = female)

- cp - chest pain type (1 = typical angina; 2 = atypical angina; 3 = non-anginal pain; 4 = asymptomatic)

- trestbps - resting blood pressure (in mm Hg on admission to the hospital)

- chol - serum cholestoral in mg/dl

- fbs - fasting blood sugar > 120 mg/dl (1 = true; 0 = false)

- restecg - resting electrocardiographic results (0 = normal; 1 = having ST-T; 2 = hypertrophy)

- thalach - maximum heart rate achieved

- exang - exercise induced angina (1 = yes; 0 = no)

- oldpeak - ST depression induced by exercise relative to rest

- slope - the slope of the peak exercise ST segment (1 = upsloping; 2 = flat; 3 = downsloping)

- ca - number of major vessels (0-3) colored by flourosopy

- thal - 3 = normal; 6 = fixed defect; 7 = reversable defect

- num - the predicted attribute - diagnosis of heart disease (angiographic disease status) (Value 0 = < 50% diameter narrowing; Value 1 = > 50% diameter narrowing)

8.7.1.2 Descriptive statistics

Notice the scaling issues? PCA is not scale-invariant. So, we need to fix this problem.

8.7.1.4 PCA analysis

pca_res <- pca_recipe %>%

step_pca(all_predictors(),

id = "pca"

) %>% # id argument identifies each PCA step

prep()

pca_res %>%

tidy(id = "pca")8.7.1.4.1 Screeplot

# To avoid conflicts

conflict_prefer("filter", "dplyr")

conflict_prefer("select", "dplyr")

pca_recipe %>%

step_pca(all_predictors(),

id = "pca"

) %>% # id argument identifies each PCA step

prep() %>%

tidy(id = "pca", type = "variance") %>%

filter(terms == "percent variance") %>%

ggplot(aes(x = component, y = value)) +

geom_col() +

labs(

x = "PCAs of heart disease",

y = "% of variance",

title = "Scree plot"

)

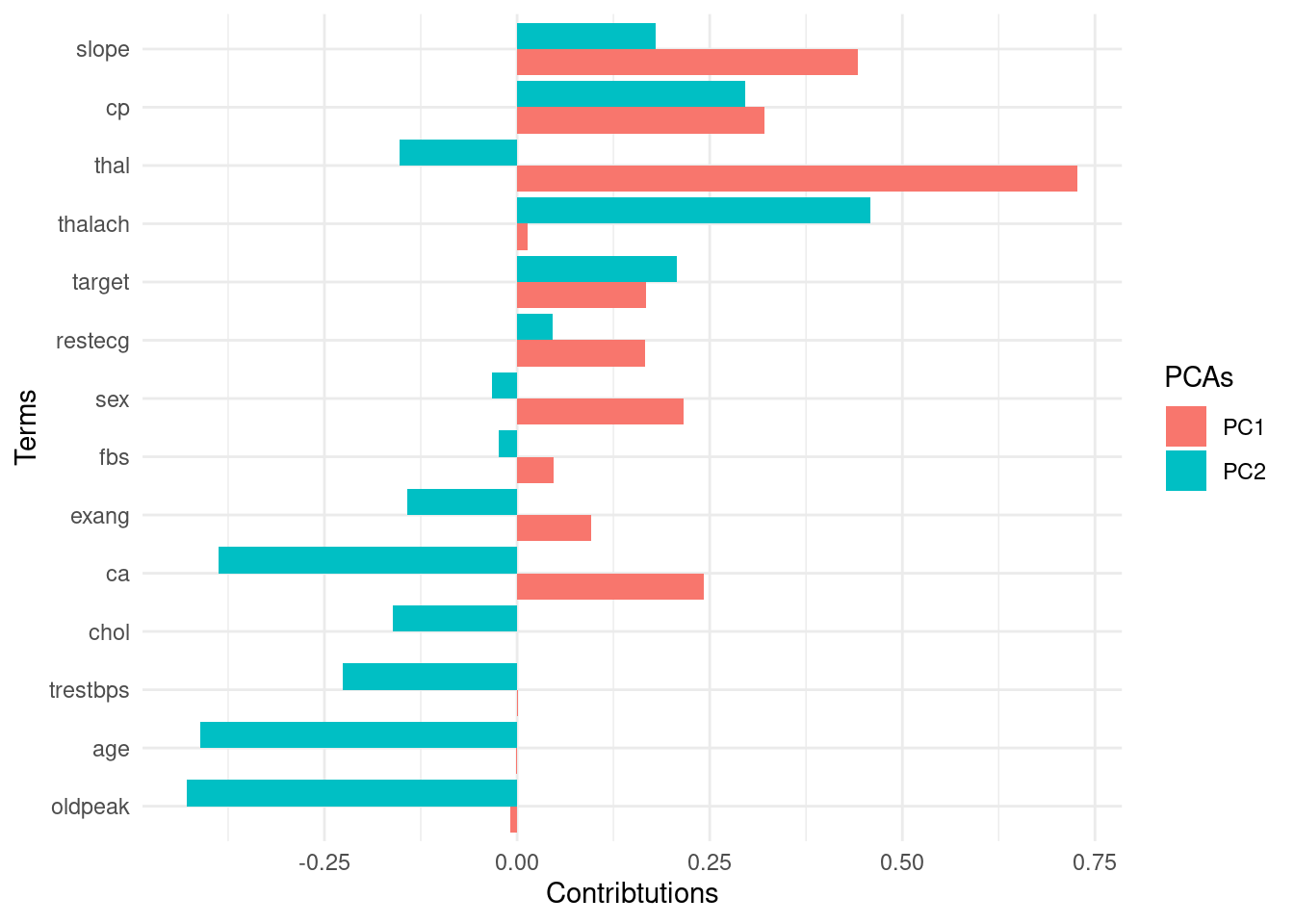

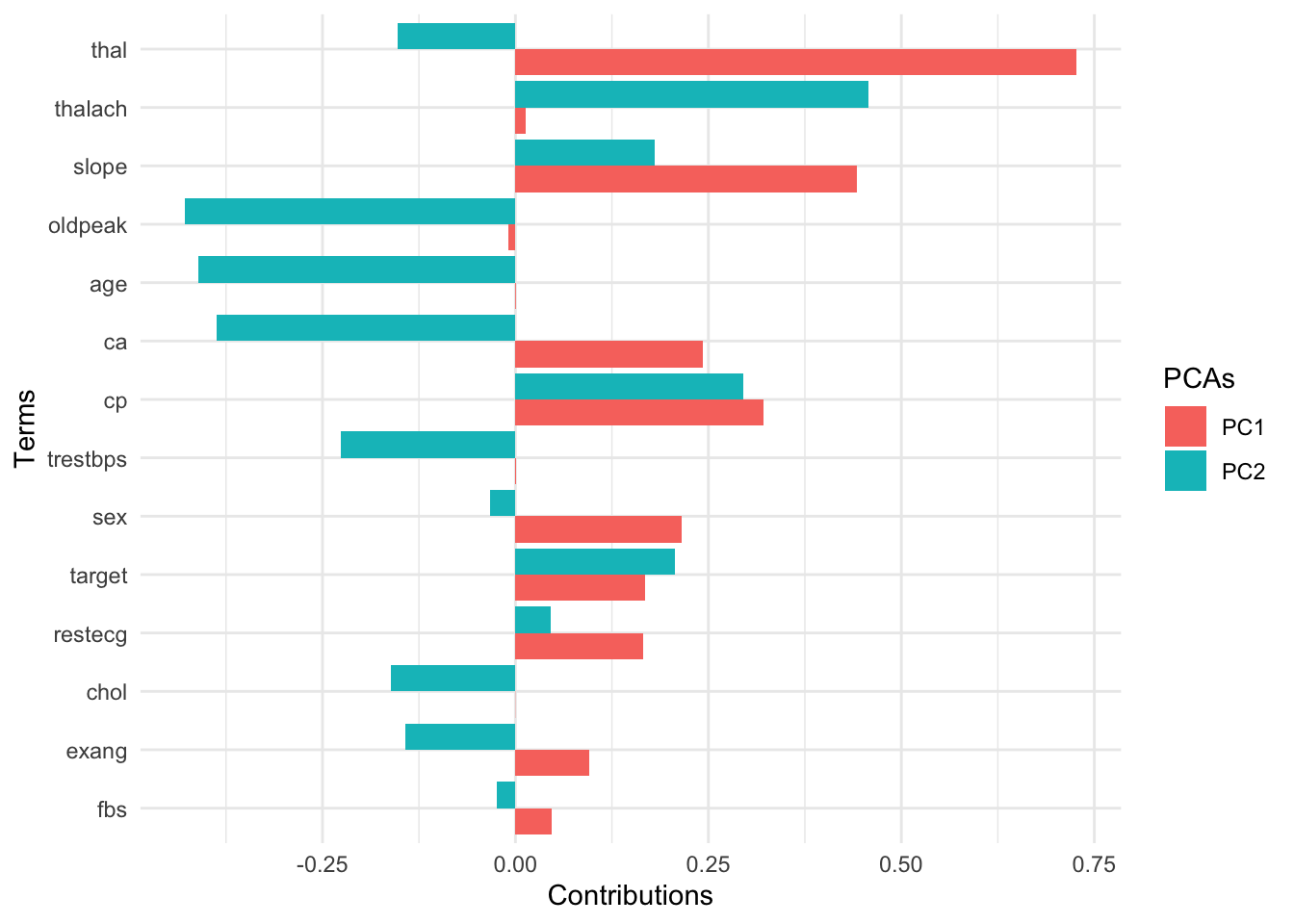

8.7.1.4.2 View factor loadings

Loadings are the covariances between the features and the principal components (=eigenvectors).

pca_recipe %>%

step_pca(all_predictors(),

id = "pca"

) %>% # id argument identifies each PCA step

prep() %>%

tidy(id = "pca") %>%

filter(component %in% c("PC1", "PC2")) %>%

mutate(terms = fct_reorder(terms, abs(value), .fun = max)) %>%

ggplot(aes(

x = terms, y = value,

fill = component

)) +

geom_col(position = "dodge") +

coord_flip() +

labs(

x = "Terms",

y = "Contributions",

fill = "PCAs"

)

The key lesson

You can use these low-dimensional data to solve the curse of dimensionality problem. Compressing feature space via dimension reduction techniques is called feature extraction. PCA is one way of doing this.

8.7.2 Topic modeling

8.7.2.1 Setup

pacman::p_load(

janeaustenr, # example text

tidytext, # tidy text analysis

glue, # paste string and objects

stm, # structural topic modeling

gutenbergr

) # toy datasets8.7.2.2 Dataset

# 1. Load all of Jane Austen's novels

austen_raw <- austen_books()

# 2. Add a chapter index (so you can facet or group by chapter if desired)

austen_chapters <- austen_raw %>%

group_by(book) %>%

mutate(

linenumber = row_number(),

chapter = cumsum(str_detect(text, regex("^chapter [0-9ivxlc]+", ignore_case = TRUE)))

) %>%

ungroup()

# 3. Tokenize and count words by book

austen_words <- austen_chapters %>%

unnest_tokens(word, text) %>%

count(book, word, sort = TRUE) %>%

group_by(book) %>%

mutate(

total = sum(n),

freq = n / total

) %>%

ungroup()8.7.2.3 Key ideas

Main papers: See Latent Dirichlet Allocation by David M. Blei, Andrew Y. Ng and Michael I. Jordan (then all Berkeley) and this follow-up paper with the same title.

Topics as distributions of words (\(\beta\) distribution)

Documents as distributions of topics (\(\alpha\) distribution)

-

What distributions?

Probability

Multinomial

Words lie on a lower-dimensional space (dimension reduction akin to PCA)

Co-occurrence of words (clustering)

-

Bag of words (feature engineering)

- Upside: easy and fast (also working quite well)

- Downside: ignored grammatical structures and rich interactions among words (Alternative: word embeddings. Please check out text2vec)

Documents are exchangeable (sequencing won’t matter).

Topics are independent (uncorrelated). If you don’t think this assumption holds, use Correlated Topics Models by Blei and Lafferty (2007).

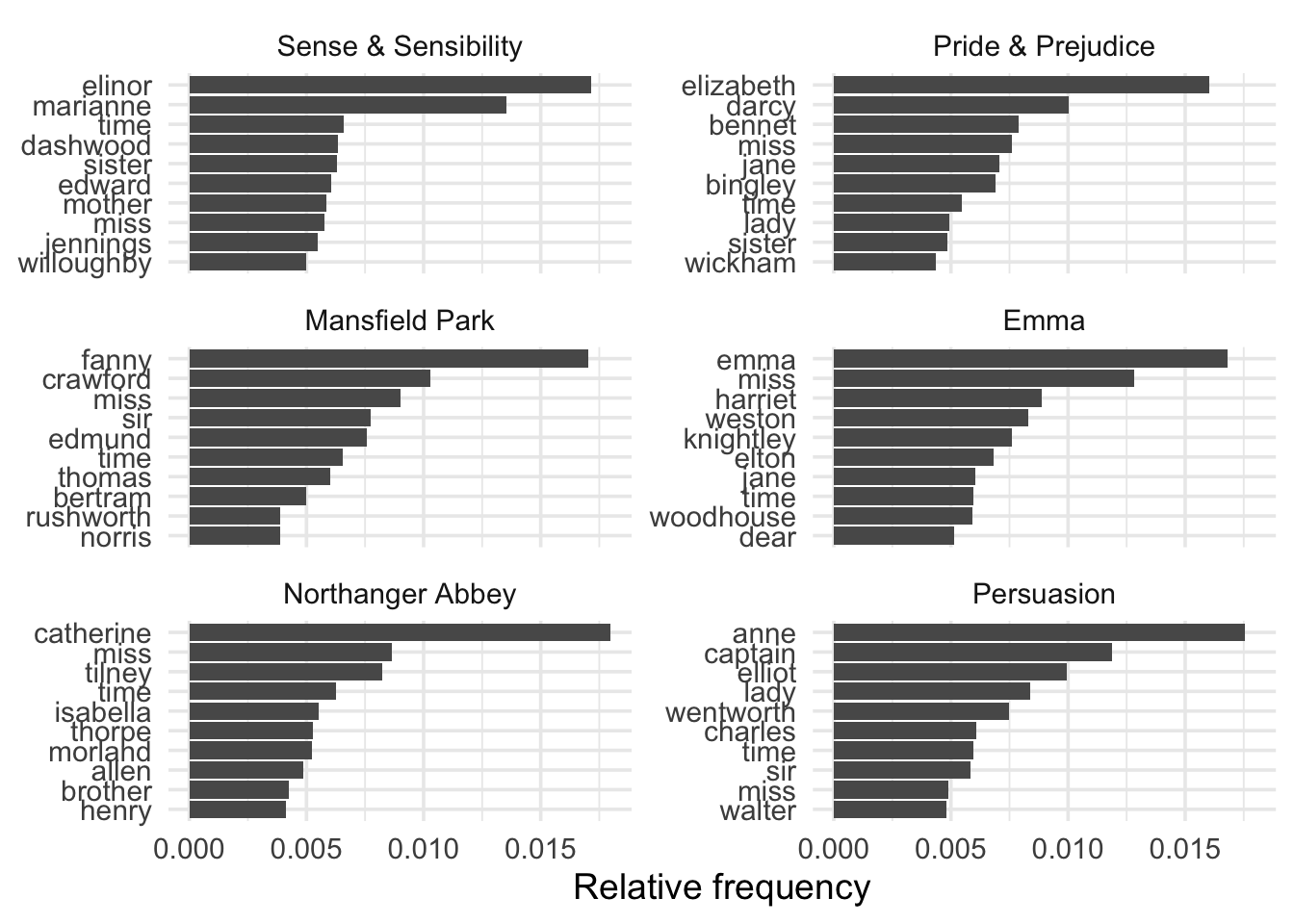

8.7.2.4 Exploratory data analysis

# 1) Tokenize, remove stop words, & count

austen_words <- austen_chapters %>%

unnest_tokens(word, text) %>%

anti_join(stop_words, by = "word") %>% # remove common stop words

count(book, word, sort = TRUE) %>%

group_by(book) %>%

mutate(

total = sum(n),

freq = n / total

) %>%

ungroup()

# 2) Pick top 10 words per book

top_words <- austen_words %>%

group_by(book) %>%

slice_max(order_by = freq, n = 10) %>%

ungroup()

# 3) Plot the top words

top_words %>%

mutate(word = tidytext::reorder_within(word, freq, book)) %>%

ggplot(aes(x = word, y = freq)) +

geom_col() +

facet_wrap(~book, scales = "free_y", ncol = 2) +

coord_flip() +

tidytext::scale_x_reordered() +

labs(

x = NULL,

y = "Relative frequency"

) +

theme_minimal(base_size = 14) +

theme(legend.position = "none")

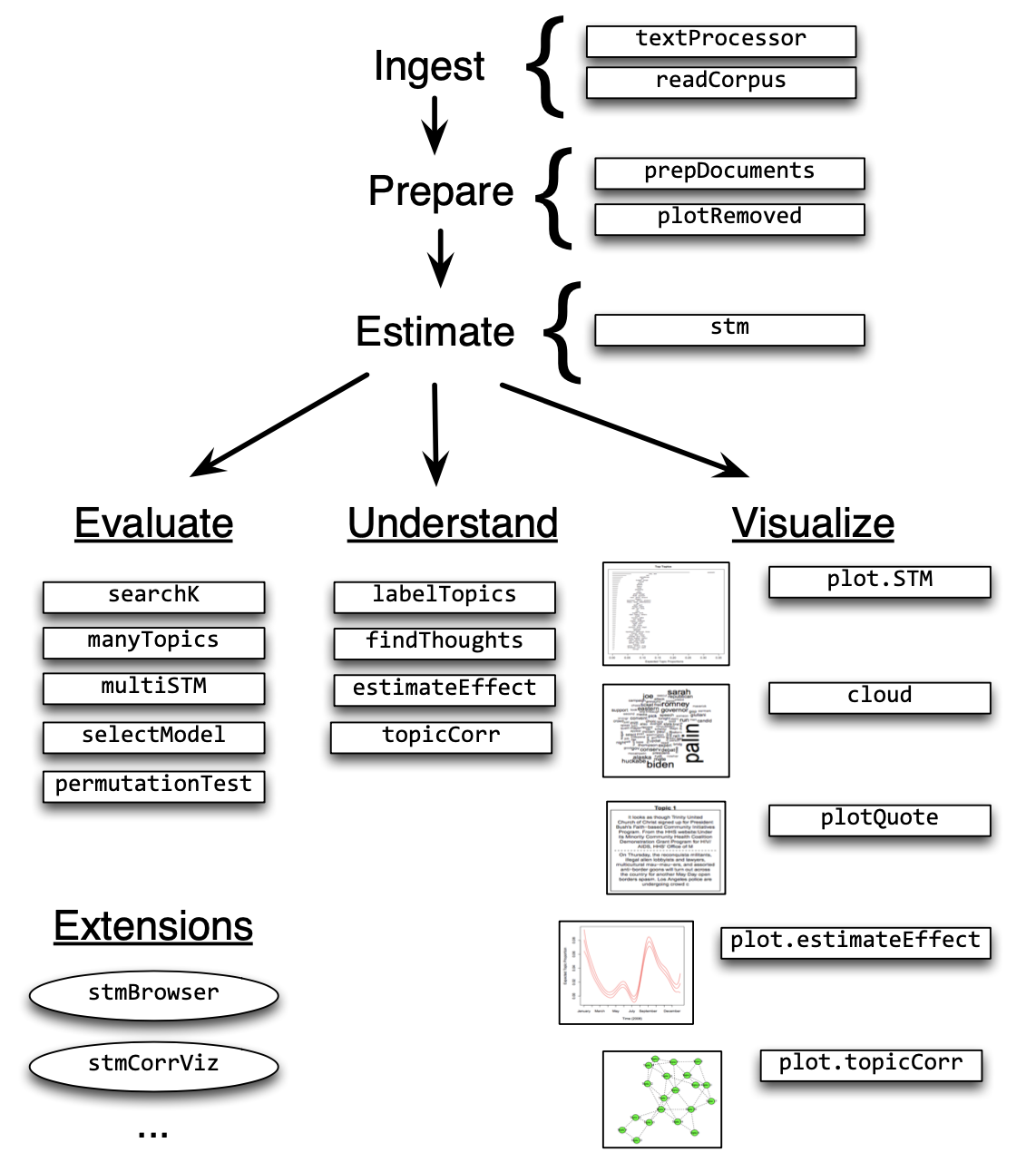

8.7.2.5 STM

Structural Topic Modeling by Roberts, Stewart, and Tingley helps estimate how topics’ proportions vary by covariates. If you don’t use covariates, this approach is close to CTM. The other useful (and very recent) topic modeling package is Keyword Assisted Topic Models (keyATM) by Shusei, Imai, and Sasaki.

Also, note that we didn’t cover other important techniques in topic modeling, such as dynamic and hierarchical topic modeling.

8.7.2.5.1 Turn text into document-term matrix

stm package has its preprocessing function.

dtm <- textProcessor(

documents = austen_chapters$text,

metadata = austen_chapters,

removestopwords = TRUE,

verbose = FALSE

)8.7.2.5.2 Tuning K

- K is the number of topics.

- Let’s try K = 5, 10, 15.

test_res <- searchK(

dtm$documents,

dtm$vocab,

K = c(5, 10),

prevalence = ~book,

data = dtm$meta

)## Beginning Spectral Initialization

## Calculating the gram matrix...

## Using only 10000 most frequent terms during initialization...

## Finding anchor words...

## .....

## Recovering initialization...

## ...................................................................................................

## Initialization complete.

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 1 (approx. per word bound = -7.740)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 2 (approx. per word bound = -7.555, relative change = 2.379e-02)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 3 (approx. per word bound = -7.482, relative change = 9.775e-03)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 4 (approx. per word bound = -7.442, relative change = 5.291e-03)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 5 (approx. per word bound = -7.419, relative change = 3.059e-03)

## Topic 1: much, one, never, see, can

## Topic 2: look, ladi, thought, alway, howev

## Topic 3: will, said, time, might, good

## Topic 4: mrs, miss, think, everi, now

## Topic 5: must, know, well, feel, make

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 6 (approx. per word bound = -7.405, relative change = 1.866e-03)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 7 (approx. per word bound = -7.396, relative change = 1.197e-03)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 8 (approx. per word bound = -7.391, relative change = 8.019e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 9 (approx. per word bound = -7.386, relative change = 5.579e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 10 (approx. per word bound = -7.383, relative change = 4.007e-04)

## Topic 1: much, one, never, see, can

## Topic 2: look, ladi, thought, alway, howev

## Topic 3: will, said, time, might, good

## Topic 4: mrs, miss, think, everi, now

## Topic 5: must, know, well, feel, make

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 11 (approx. per word bound = -7.381, relative change = 2.955e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 12 (approx. per word bound = -7.380, relative change = 2.227e-04)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 13 (approx. per word bound = -7.378, relative change = 1.710e-04)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 14 (approx. per word bound = -7.377, relative change = 1.333e-04)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 15 (approx. per word bound = -7.377, relative change = 1.055e-04)

## Topic 1: much, one, never, see, can

## Topic 2: look, ladi, thought, alway, howev

## Topic 3: will, said, time, might, good

## Topic 4: mrs, miss, think, everi, now

## Topic 5: must, know, well, feel, make

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 16 (approx. per word bound = -7.376, relative change = 8.454e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 17 (approx. per word bound = -7.375, relative change = 6.850e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 18 (approx. per word bound = -7.375, relative change = 5.602e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 19 (approx. per word bound = -7.375, relative change = 4.618e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 20 (approx. per word bound = -7.374, relative change = 3.837e-05)

## Topic 1: much, one, never, see, can

## Topic 2: look, ladi, thought, alway, howev

## Topic 3: will, said, time, might, good

## Topic 4: mrs, miss, think, everi, now

## Topic 5: must, know, well, feel, make

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 21 (approx. per word bound = -7.374, relative change = 3.212e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 22 (approx. per word bound = -7.374, relative change = 2.705e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 23 (approx. per word bound = -7.374, relative change = 2.289e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 24 (approx. per word bound = -7.374, relative change = 1.941e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 25 (approx. per word bound = -7.374, relative change = 1.653e-05)

## Topic 1: much, one, never, see, can

## Topic 2: look, ladi, thought, alway, howev

## Topic 3: will, said, time, might, good

## Topic 4: mrs, miss, think, everi, now

## Topic 5: must, know, well, feel, make

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 26 (approx. per word bound = -7.373, relative change = 1.416e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 27 (approx. per word bound = -7.373, relative change = 1.223e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Completing Iteration 28 (approx. per word bound = -7.373, relative change = 1.062e-05)

## ....................................................................................................

## Completed E-Step (2 seconds).

## Completed M-Step.

## Model Converged

## Beginning Spectral Initialization

## Calculating the gram matrix...

## Using only 10000 most frequent terms during initialization...

## Finding anchor words...

## ..........

## Recovering initialization...

## ...................................................................................................

## Initialization complete.

## ....................................................................................................

## Completed E-Step (5 seconds).

## Completed M-Step.

## Completing Iteration 1 (approx. per word bound = -7.839)

## ....................................................................................................

## Completed E-Step (4 seconds).

## Completed M-Step.

## Completing Iteration 2 (approx. per word bound = -7.662, relative change = 2.250e-02)

## ....................................................................................................

## Completed E-Step (4 seconds).

## Completed M-Step.

## Completing Iteration 3 (approx. per word bound = -7.559, relative change = 1.354e-02)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 4 (approx. per word bound = -7.504, relative change = 7.255e-03)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 5 (approx. per word bound = -7.469, relative change = 4.686e-03)

## Topic 1: much, know, littl, look, wish

## Topic 2: sister, think, come, mother, way

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, first, day, happi, elizabeth

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, ever, father, made

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 6 (approx. per word bound = -7.443, relative change = 3.389e-03)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 7 (approx. per word bound = -7.424, relative change = 2.541e-03)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 8 (approx. per word bound = -7.410, relative change = 1.898e-03)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 9 (approx. per word bound = -7.400, relative change = 1.410e-03)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 10 (approx. per word bound = -7.392, relative change = 1.057e-03)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, ever, made, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 11 (approx. per word bound = -7.386, relative change = 8.043e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 12 (approx. per word bound = -7.382, relative change = 6.199e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 13 (approx. per word bound = -7.378, relative change = 4.837e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 14 (approx. per word bound = -7.375, relative change = 3.829e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 15 (approx. per word bound = -7.373, relative change = 3.076e-04)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 16 (approx. per word bound = -7.371, relative change = 2.501e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 17 (approx. per word bound = -7.370, relative change = 2.048e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 18 (approx. per word bound = -7.368, relative change = 1.687e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 19 (approx. per word bound = -7.367, relative change = 1.397e-04)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 20 (approx. per word bound = -7.366, relative change = 1.160e-04)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 21 (approx. per word bound = -7.366, relative change = 9.714e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 22 (approx. per word bound = -7.365, relative change = 8.281e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 23 (approx. per word bound = -7.365, relative change = 7.208e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 24 (approx. per word bound = -7.364, relative change = 6.338e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 25 (approx. per word bound = -7.364, relative change = 5.648e-05)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 26 (approx. per word bound = -7.363, relative change = 5.088e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 27 (approx. per word bound = -7.363, relative change = 4.614e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 28 (approx. per word bound = -7.363, relative change = 4.164e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 29 (approx. per word bound = -7.362, relative change = 3.678e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 30 (approx. per word bound = -7.362, relative change = 3.158e-05)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 31 (approx. per word bound = -7.362, relative change = 2.701e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 32 (approx. per word bound = -7.362, relative change = 2.355e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 33 (approx. per word bound = -7.362, relative change = 2.098e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 34 (approx. per word bound = -7.362, relative change = 1.895e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 35 (approx. per word bound = -7.361, relative change = 1.699e-05)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 36 (approx. per word bound = -7.361, relative change = 1.546e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 37 (approx. per word bound = -7.361, relative change = 1.433e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 38 (approx. per word bound = -7.361, relative change = 1.345e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 39 (approx. per word bound = -7.361, relative change = 1.275e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 40 (approx. per word bound = -7.361, relative change = 1.233e-05)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 41 (approx. per word bound = -7.361, relative change = 1.218e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 42 (approx. per word bound = -7.361, relative change = 1.212e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 43 (approx. per word bound = -7.361, relative change = 1.237e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 44 (approx. per word bound = -7.361, relative change = 1.294e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 45 (approx. per word bound = -7.360, relative change = 1.367e-05)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 46 (approx. per word bound = -7.360, relative change = 1.419e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 47 (approx. per word bound = -7.360, relative change = 1.430e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 48 (approx. per word bound = -7.360, relative change = 1.386e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 49 (approx. per word bound = -7.360, relative change = 1.273e-05)

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

## Completing Iteration 50 (approx. per word bound = -7.360, relative change = 1.074e-05)

## Topic 1: much, know, littl, look, wish

## Topic 2: think, sister, come, way, mother

## Topic 3: must, feel, can, noth, soon

## Topic 4: make, day, first, happi, sir

## Topic 5: mrs, everi, well, ladi, hope

## Topic 6: miss, see, made, ever, father

## Topic 7: without, fanni, give, sure, room

## Topic 8: said, one, time, might, great

## Topic 9: will, now, say, never, good

## Topic 10: talk, last, better, brother, pleasur

## ....................................................................................................

## Completed E-Step (3 seconds).

## Completed M-Step.

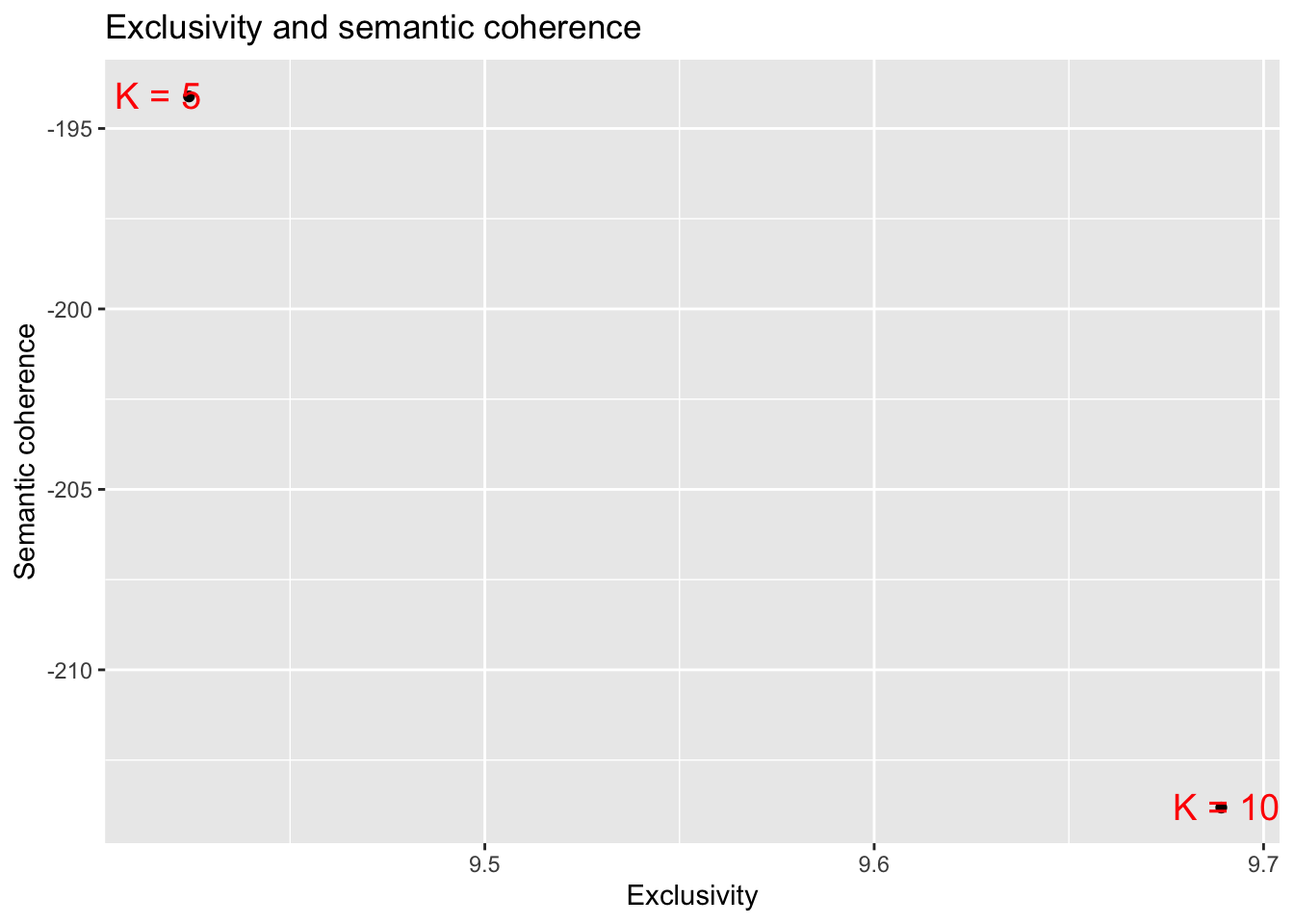

## Model Converged8.7.2.5.3 Evaluating models

Several metrics assess topic models’ performance: the held-out likelihood, residuals, semantic coherence, and exclusivity. Here we examine the relationship between semantic coherence and exclusivity to understand the trade-off involved in selecting K.

Semantic coherence: high probability words for a topic co-occur in documents

Exclusivity: keywords of one topic are not likely to appear as keywords in other topics.

In Roberts et al. (2014), the authors introduce the semantic coherence metric of Mimno et al. (2011) as a criterion for topic‐model selection. They observe, however, that maximizing semantic coherence alone can be trivial—by allowing a small number of topics to capture the most frequent words. To counteract this, they also develop an exclusivity measure.

This exclusivity measure incorporates word‐frequency information via the FREX metric (implemented in

calcfrex), with a default weight of 0.7 placed on exclusivity.

test_res$results %>%

unnest(c(K, exclus, semcoh)) %>%

select(K, exclus, semcoh) %>%

mutate(K = as.factor(K)) %>%

ggplot(aes(x = exclus, y = semcoh)) +

geom_point() +

geom_text(

label = glue("K = {test_res$results$K}"),

size = 5,

color = "red",

position = position_jitter(width = 0.05, height = 0.05)

) +

labs(

x = "Exclusivity",

y = "Semantic coherence",

title = "Exclusivity and semantic coherence"

)

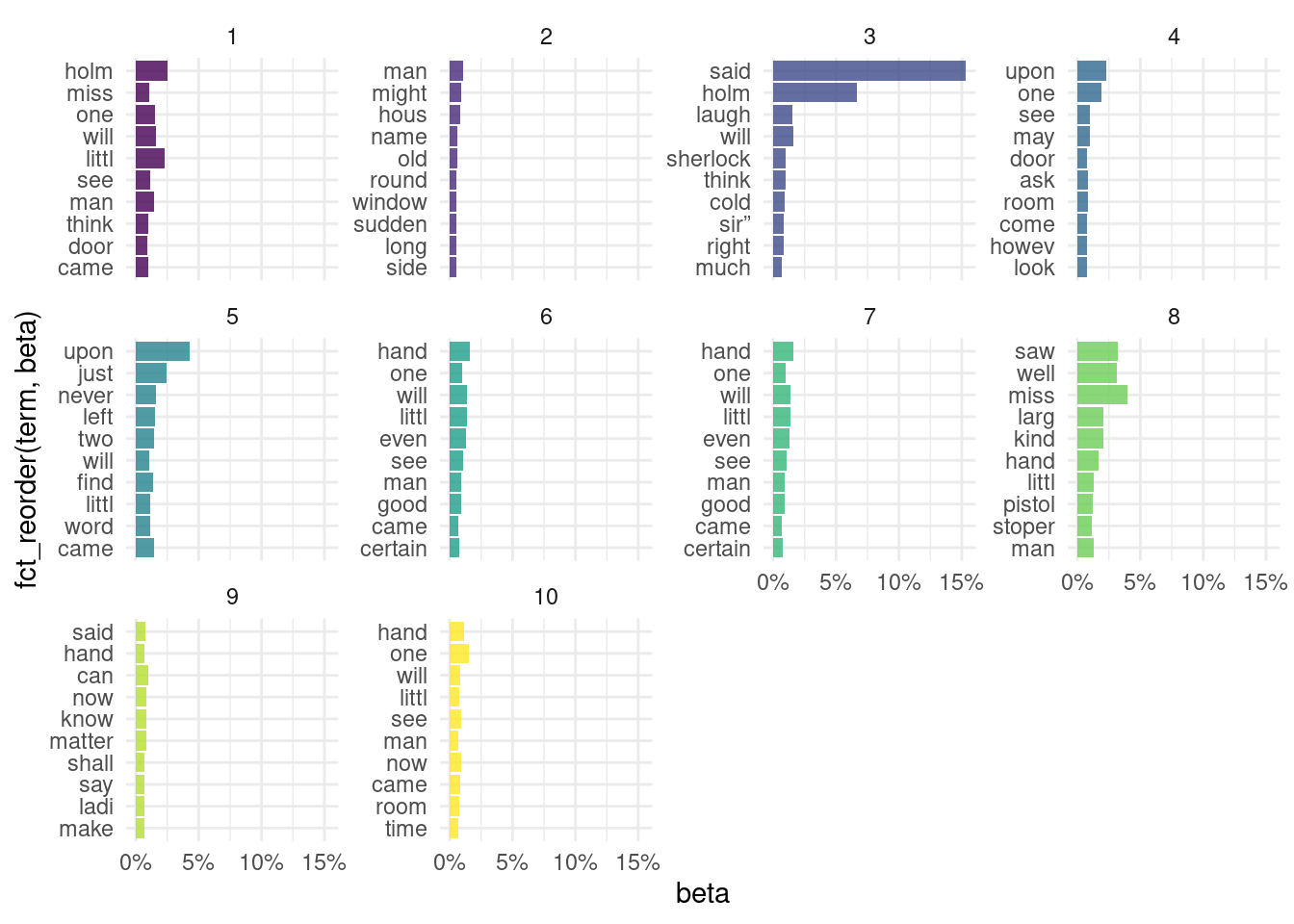

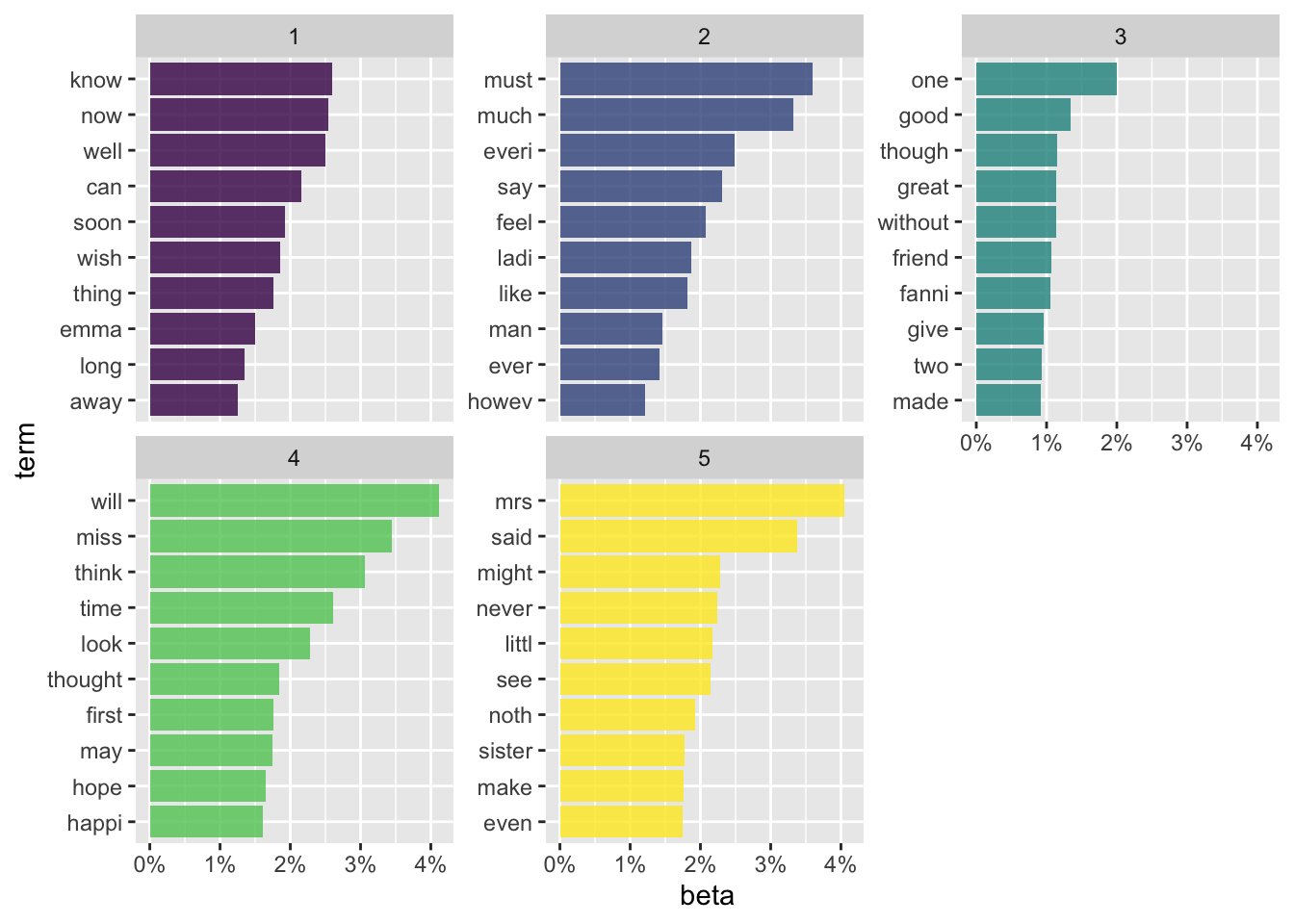

8.7.2.5.4 Finalize

final_stm <- stm(

dtm$documents, dtm$vocab,

K = 5,

prevalence = ~book,

data = dtm$meta,

init.type = "Spectral",

max.em.its = 75,

seed = 1234567,

verbose = FALSE

)8.7.2.5.5 Explore the results

- Using the

stmpackage.

plot(final_stm)

- Using ggplot2

In LDA distribution, \(\alpha\) represents document-topic density and \(\beta\) represents topic-word density.

# tidy

tidy_stm <- tidy(final_stm)

# top terms

tidy_stm %>%

group_by(topic) %>%

top_n(10, beta) %>%

ungroup() %>%

mutate(term = tidytext::reorder_within(term, beta, topic)) %>%

ggplot(aes(term, beta, fill = as.factor(topic))) +

geom_col(alpha = 0.8, show.legend = FALSE) +

facet_wrap(~topic, scales = "free_y") +

coord_flip() +

tidytext::scale_x_reordered() +

scale_y_continuous(labels = scales::percent) +

scale_fill_viridis_d()

8.8 References

8.8.1 Books

An Introduction to Statistical Learning - with Applications in R (2013) by Gareth James, Daniela Witten, Trevor Hastie, Robert Tibshirani. Springer: New York. Amazon or free PDF.

Hands-On Machine Learning with R (2020) by Bradley Boehmke & Brandon Greenwell. CRC Press or Amazon

Applied Predictive Modeling (2013) by Max Kuhn and Kjell Johnson. Springer: New York. Amazon

Feature Engineering and Selection: A Practical Approach for Predictive Models (2019) by Kjell Johnson and Max Kuhn. Taylor & Francis. Amazon or free HTML.

Tidy Modeling with R (2020) by Max Kuhn and Julia Silge (work-in-progress)

8.8.2 Lecture slides

An introduction to supervised and unsupervised learning (2015) by Susan Athey and Guido Imbens

Introduction Machine Learning with the Tidyverse by Alison Hill

8.8.3 Blog posts

- “Using the recipes package for easy pre-processing” by Rebecca Barter