7 Semi-structured data

7.1 Setup

```r
# Install packages
if (!require("pacman")) install.packages("pacman")

pacman::p_load(
  tidyverse, # tidyverse pkgs including purrr
  furrr,     # parallel processing
  tictoc,    # performance test
  tcltk,     # GUI for choosing a dir path
  tidyjson,  # tidying JSON files
  XML,       # parsing XML
  rvest,     # parsing HTML
  jsonlite,  # downloading JSON file from web
  glue,      # pasting string and objects
  xopen,     # open URLs in browser
  urltools,  # regex and url parsing
  here       # computational reproducibility
)
```

```r
## Install the current development version from GitHub
devtools::install_github("jaeyk/tidytweetjson", dependencies = TRUE)
library(tidytweetjson)
```

7.2 The Big Picture

- Automating the process of turning semi-structured data (input) into structured data (output)

7.3 What is semi-structured data?

Semi-structured data is a form of structured data that does not obey the tabular structure of data models associated with relational databases or other forms of data tables, but nonetheless contains tags or other markers to separate semantic elements and enforce hierarchies of records and fields within the data. Therefore, it is also known as a self-describing structure. - Wikipedia

- Examples: HTML (e.g., websites), XML (e.g., government data), JSON (e.g., social media API)

Below is what JSON (a tweet) looks like. Note its two key features:

- A tree-like structure
- Keys and values (key: value)

```json
{
  "created_at": "Thu Apr 06 15:24:15 +0000 2017",
  "id_str": "850006245121695744",
  "text": "1\/ Today we\u2019re sharing our vision for the future of the Twitter API platform!\nhttps:\/\/t.co\/XweGngmxlP",
  "user": {
    "id": 2244994945,
    "name": "Twitter Dev",
    "screen_name": "TwitterDev",
    "location": "Internet",
    "url": "https:\/\/dev.twitter.com\/",
    "description": "Your official source for Twitter Platform news, updates & events. Need technical help? Visit https:\/\/twittercommunity.com\/ \u2328\ufe0f #TapIntoTwitter"
  }
}
```
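To see how the keys and the tree structure map onto R objects, here is a minimal sketch that parses a trimmed-down copy of the tweet above with `jsonlite::fromJSON()` and flattens a few chosen keys into one row of a data frame. The variable names (`tweet_json`, `tweet`, `tweet_df`) are illustrative, and only a subset of the keys is kept.

```r
library(jsonlite)

# A trimmed-down copy of the tweet above (only a few keys are kept)
tweet_json <- '{
  "created_at": "Thu Apr 06 15:24:15 +0000 2017",
  "id_str": "850006245121695744",
  "user": {
    "id": 2244994945,
    "name": "Twitter Dev",
    "screen_name": "TwitterDev",
    "location": "Internet"
  }
}'

# fromJSON() turns each JSON object into a named R list,
# so the nested "user" object becomes a nested list
tweet <- fromJSON(tweet_json)
names(tweet)            # "created_at" "id_str" "user"
tweet$user$screen_name  # "TwitterDev"

# Pick the keys we want and flatten them into one row of a data frame
tweet_df <- data.frame(
  id_str      = tweet$id_str,
  created_at  = tweet$created_at,
  screen_name = tweet$user$screen_name,
  location    = tweet$user$location
)
tweet_df
```

Following a path of keys (`user` then `screen_name`) is all it takes to reach a value, which is what makes this kind of data semi-structured rather than unstructured.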


Why should we care about semi-structured data?

- Because this is what the data frontier looks like: the amount of unstructured data > the amount of semi-structured data > the amount of structured data.

- There are easy and fast ways to turn semi-structured data into structured data (ideally in a tidy format) using R, Python, and command-line tools. See my own examples (tidyethnicnews and tidytweetjson).

7.4 Workflow

- Import/connect to a semi-structured file using `rvest`, `jsonlite`, `xml2`, `pdftools`, `tidyjson`, etc.
- Define target elements in a single file and extract them
  - `readr` package provides `parse_` functions that are useful for vector parsing.
  - `stringr` package for string manipulations (e.g., using regular expressions in a tidy way). Quite useful for parsing PDF files (see this example).
  - `rvest` package for parsing HTML (R equivalent to Beautiful Soup in Python)
  - `tidyjson` package for parsing JSON data
- Create a list of files (in this case URLs) to parse
- Write a parsing function
- Automate parsing process
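As a small illustration of the vector-parsing tools in the second step, here is a sketch using `readr` and `stringr` on made-up strings (all inputs and variable names are hypothetical). `parse_number()` strips non-numeric characters around the first number, and the `str_*` functions apply a regular expression to every element of a vector.

```r
library(readr)
library(stringr)

# readr's parse_* functions turn character vectors into typed vectors
nums <- parse_number(c("$1,234", "56%", "about 7 items"))  # 1234 56 7
snapshot_date <- parse_date("2021-04-01")                  # a Date object

# stringr applies regular expressions in a tidy, vectorized way
fields <- c("Motto: Fiat lux", "Established: 1868")
has_motto <- str_detect(fields, "^Motto")        # TRUE FALSE
years     <- str_extract(fields, "\\d{4}")       # NA "1868"
motto     <- str_remove(fields[1], "^Motto:\\s*") # "Fiat lux"
```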

7.5 HTML/CSS: web scraping

Let’s go back to the example we covered in an earlier chapter of the book.

```r
url_list <- c(
  "https://en.wikipedia.org/wiki/University_of_California,_Berkeley",
  "https://en.wikipedia.org/wiki/Stanford_University",
  "https://en.wikipedia.org/wiki/Carnegie_Mellon_University",
  "https://DLAB" # not a valid URL (useful for testing error handling in Step 5)
)
```

- Step 1: Inspection

Examine the Berkeley Wikipedia page to identify the node that contains the school’s motto. If you’re using Chrome, select the element of interest, then right-click > Inspect, right-click the highlighted node in the Elements panel, and choose Copy > Copy full XPath.
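A full XPath copied from the browser encodes the page's exact layout, so it breaks whenever that layout changes. As a sketch of a sturdier alternative (not the approach used in the code below), a relative XPath can anchor on the row header instead. The HTML here is a tiny hypothetical stand-in for an infobox, not the real Wikipedia markup, so the example runs offline.

```r
library(rvest)

# A tiny, hypothetical stand-in for an infobox table (not real Wikipedia markup)
page <- read_html('
<html><body>
  <table class="infobox">
    <tr><th>Motto</th><td>Fiat lux</td></tr>
    <tr><th>Type</th><td>Public</td></tr>
  </table>
</body></html>')

# Relative XPath: find the <th> whose text is "Motto", then take the <td> next to it
motto <- page %>%
  html_node(xpath = "//table[contains(@class, 'infobox')]//th[text()='Motto']/following-sibling::td") %>%
  html_text(trim = TRUE)

motto # "Fiat lux"
```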

```r
url <- "https://en.wikipedia.org/wiki/University_of_California,_Berkeley"

# Save a local copy of the page, then parse it
download.file(url, destfile = "scraped_page.html", quiet = TRUE)
target <- read_html("scraped_page.html")

# Full XPath copied from the browser; it breaks if the page layout changes
# If you want character vector output
target %>%
  html_nodes(xpath = "/html/body/div[3]/div[3]/div[5]/div[1]/table[1]") %>%
  html_text()

# If you want table output
target %>%
  html_nodes(xpath = "/html/body/div[3]/div[3]/div[5]/div[1]/table[1]") %>%
  html_table()
```

- Step 2: Write a function

I highly recommend making your function run slowly by wrapping it with `slowly()` from purrr, so that it pauses between requests instead of hitting the server in rapid succession.
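Here is a minimal sketch of what `slowly()` does. To keep it runnable offline, it wraps a hypothetical toy function (`fetch_page_length()`, which only counts characters) rather than a real scraper; `rate_delay(pause = 0.5)` asks purrr to wait half a second between calls.

```r
library(purrr)

# Hypothetical toy function standing in for a scraper, so the example runs offline
fetch_page_length <- function(url) nchar(url)

# slowly() returns a new function that pauses between consecutive calls
fetch_slowly <- slowly(fetch_page_length, rate = rate_delay(pause = 0.5))

urls <- c("https://example.com/a", "https://example.com/bb")

start <- Sys.time()
lengths_out <- map_int(urls, fetch_slowly)
elapsed <- as.numeric(difftime(Sys.time(), start, units = "secs"))

lengths_out # 21 22
```

Once `get_table_from_wiki()` below is defined, the same wrapper should apply directly, e.g. `map(url_list, slowly(get_table_from_wiki, rate = rate_delay(1)))`.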

```r
get_table_from_wiki <- function(url){
  # Download a local copy of the page and parse it
  download.file(url, destfile = "scraped_page.html", quiet = TRUE)
  target <- read_html("scraped_page.html")

  # Extract the table at the copied XPath (returns a list of data frames)
  table <- target %>%
    html_nodes(xpath = "/html/body/div[3]/div[3]/div[5]/div[1]/table[1]") %>%
    html_table()

  return(table)
}
```

- Step 3: Test

```r
get_table_from_wiki(url_list[[2]])
```

- Step 4: Automation

```r
# This stops with an error when it reaches the invalid "https://DLAB" entry
map(url_list, get_table_from_wiki)
```

- Step 5: Error handling
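One way to keep a single bad input from stopping the whole loop is to wrap the parsing function with purrr's `possibly()` or `safely()`. The sketch below uses a hypothetical stand-in, `parse_page()`, that fails on anything that is not a Wikipedia URL, so it runs offline and mimics what happens with `"https://DLAB"`.

```r
library(purrr)

# Hypothetical stand-in for get_table_from_wiki(): fails on non-Wikipedia URLs
parse_page <- function(url) {
  if (!grepl("^https://en\\.wikipedia\\.org/wiki/", url)) {
    stop("Cannot parse: ", url)
  }
  sub("^https://en\\.wikipedia\\.org/wiki/", "", url)
}

urls <- c(
  "https://en.wikipedia.org/wiki/Stanford_University",
  "https://DLAB"
)

# possibly() returns `otherwise` instead of throwing an error
parse_page_possibly <- possibly(parse_page, otherwise = NA_character_)
parsed <- map_chr(urls, parse_page_possibly) # "Stanford_University" NA

# safely() keeps both the result and the error for every input
results <- map(urls, safely(parse_page))
errors <- map(results, "error") # NULL for successes, a condition object for failures
```

With the real scraper, the same pattern would be `map(url_list, possibly(get_table_from_wiki, otherwise = NULL))`, which should return `NULL` for the invalid URL while still collecting the other tables. `safely()` is the better choice when you want to inspect why each failure happened.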

7.6.2 Social media API (JSON)

7.6.2.1 Objectives

Review question

In the previous session, we learned the difference between semi-structured data and structured data. Can anyone tell us the difference between them?

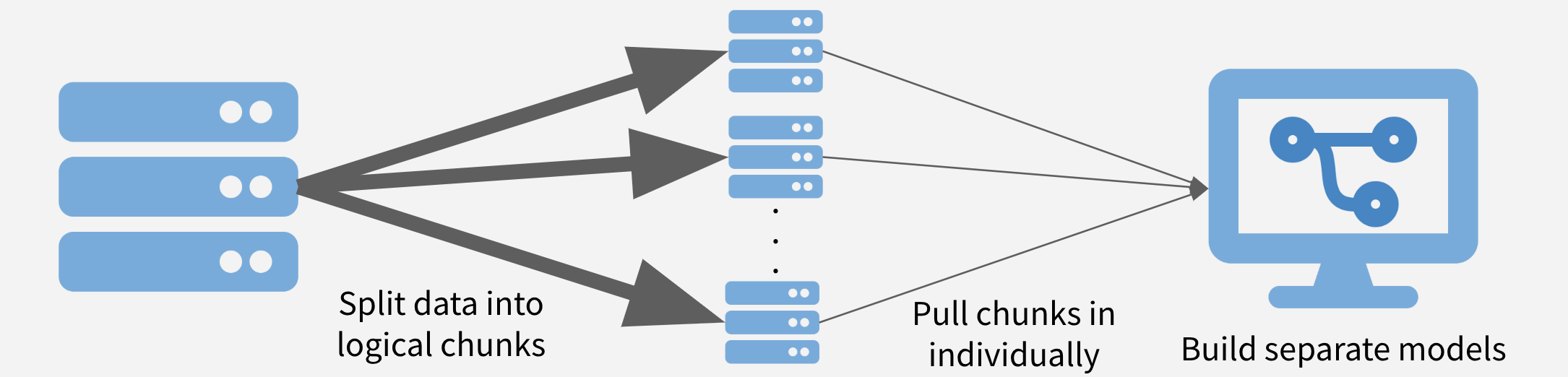

7.6.2.2 The big picture for digital data collection

Input: semi-structured data

Output: structured data

Process:

Getting target data from a remote server

Parsing the target data on your laptop or in a database

Database (push-parse): Push the large target data to a database, then explore, select, and filter it. If you are interested in using this option, check out my SQL for R Users workshop.

But what exactly is this target data?

When you scrape websites, you mostly deal with HTML (which defines a website's structure), CSS (its style), and JavaScript (its dynamic interactions).

When you access social media data through an API, you deal with either XML or JSON (major formats for storing and transporting data; they are light and flexible).

XML and JSON have tree-like (nested: a root and branches) structures, organized as keys and values (JSON) or elements and attributes (XML).
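To make the parallel concrete, here is a sketch that writes the same simplified user record (values borrowed from the tweet earlier in this chapter) once as XML and once as JSON, then pulls out the same fields. XML is navigated with XPath and attributes via `xml2`; JSON is navigated through nested list names via `jsonlite`.

```r
library(xml2)
library(jsonlite)

# The same simplified user record written as XML and as JSON
user_xml  <- read_xml('<user id="2244994945"><screen_name>TwitterDev</screen_name><location>Internet</location></user>')
user_json <- fromJSON('{"user": {"id": 2244994945, "screen_name": "TwitterDev", "location": "Internet"}}')

# XML: navigate elements with XPath, read attributes with xml_attr()
xml_screen_name <- xml_text(xml_find_first(user_xml, "/user/screen_name"))
xml_id          <- xml_attr(xml_root(user_xml), "id")

# JSON: navigate keys as nested list names
json_screen_name <- user_json$user$screen_name
```

In XML the `id` lives in an attribute of the `<user>` element, while in JSON it is just another key; both reach `"TwitterDev"` by walking from the root down a branch.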

If HTML, CSS, and JavaScript are storefronts, then XML and JSON are warehouses.

7.6.2.3 Opportunities and challenges for parsing social media data

This explanation draws on Pablo Barberá’s LSE social media workshop slides.

Basic information

What is an API? An interface (you can think of it as something akin to a restaurant menu; API parameters are the menu items).

REST (Representational state transfer) API: static information (e.g., user profiles, list of followers and friends)

Streaming API: dynamic information (e.g., new tweets)

Why should we care?

APIs are the new data frontier. ProgrammableWeb shows that there are more than 24,046 APIs as of April 1, 2021.

Big and streaming (real-time) data

High-dimensional data (e.g., text, image, video, etc.)

Lots of analytic opportunities (e.g., time-series, network, spatial analysis)

Also, this type of data has many limitations (external validity, algorithmic bias, etc.).

Think about taking the API + approach (i.e., API not replacing but augmenting traditional data collection)

How API works

Request (you form a request URL) <-> Response (the API responds to your request by sending you data, usually in JSON format)
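Here is a minimal sketch of both halves of that exchange, run offline. The endpoint `https://api.example.com/v1/search`, the parameter names `query` and `max_results`, and the response payload are all hypothetical placeholders; real APIs document their own endpoints, parameters, and response shapes.

```r
library(jsonlite)

# 1. Request: build a request URL from an endpoint plus query parameters
#    (endpoint and parameter names are hypothetical)
base_url <- "https://api.example.com/v1/search"
params   <- list(query = "data science", max_results = 10)

# Percent-encode each value (e.g., the space becomes %20)
encoded <- vapply(params, function(v) URLencode(as.character(v), reserved = TRUE), character(1))
request_url <- paste0(base_url, "?", paste(names(params), encoded, sep = "=", collapse = "&"))
request_url
# "https://api.example.com/v1/search?query=data%20science&max_results=10"

# 2. Response: a made-up JSON payload of the kind an API might send back
response_body <- '{
  "data": [
    {"id": "1", "text": "first post"},
    {"id": "2", "text": "second post"}
  ],
  "meta": {"result_count": 2}
}'

response <- fromJSON(response_body)
response$data              # an array of objects becomes a data frame
response$meta$result_count # 2
```

Against a real, unauthenticated GET endpoint, `fromJSON()` can also read straight from the URL (`fromJSON(request_url)`); APIs that require keys or tokens usually call for an HTTP client package instead.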

API Statuses

The Twitter API is still widely accessible (v2).

In January 2021, Twitter introduced the academic Twitter API that allows generous access to Twitter’s historical data for academic researchers

Many R packages exist for the Twitter API: rtweet (REST + streaming), tweetscores (REST), streamR (streaming)

There are some notable limitations. For example, if Twitter users don’t share their tweets’ locations (e.g., GPS), you can’t collect them.

The following comments draw on Alexandra Siegel’s talk on “Collecting and Analyzing Social Media Data” given at Montréal Methods Workshops.

Facebook API access has become constrained since the 2016 U.S. election.

Exception: Social Science One.

Also, check out CrowdTangle for collecting public FB page data.

Using FB ads is still a popular method, especially among scholars studying developing countries.

YouTube API: generous access + (computer-generated) transcript in many languages

Instagram API: Data from public accounts are available.

Reddit API: Well-annotated text data suitable for machine learning

Upside

Web scraping (Wild Wild West) <> API (Big Gated Garden)

You have legal but limited access to (growing) big data that can be divided into text, image, and video and transformed into cross-sectional (geocodes), longitudinal (timestamps), and historical event data (hashtags). See Zachary C. Steinert-Threlkeld’s 2020 APSA Short Course Generating Event Data From Social Media.
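As a rough sketch of that transformation, the code below turns timestamps into longitudinal counts (posts per day) and hashtags into event markers. The three posts are made up, and the format string assumes the `created_at` layout shown in the tweet earlier in this chapter (`"Thu Apr 06 15:24:15 +0000 2017"`).

```r
# Use the C locale so %a and %b match English day and month abbreviations
old_locale <- Sys.getlocale("LC_TIME")
invisible(Sys.setlocale("LC_TIME", "C"))

# Hypothetical example posts
posts <- data.frame(
  created_at = c("Thu Apr 06 15:24:15 +0000 2017",
                 "Fri Apr 07 09:00:00 +0000 2017",
                 "Fri Apr 07 18:30:00 +0000 2017"),
  text = c("Big news #TapIntoTwitter",
           "Protest downtown #rally #city",
           "Still marching #rally")
)

# Timestamps -> longitudinal data: parse the string, then count posts per day
posts$time <- as.POSIXct(posts$created_at, format = "%a %b %d %H:%M:%S %z %Y", tz = "UTC")
posts_per_day <- table(as.Date(posts$time, tz = "UTC"))

# Hashtags -> event markers: pull every "#word" token out of each post
hashtags <- regmatches(posts$text, gregexpr("#\\w+", posts$text, perl = TRUE))
hashtag_counts <- table(unlist(hashtags))

# Restore the previous locale
invisible(Sys.setlocale("LC_TIME", old_locale))
```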

Social media data are also well organized, managed, and curated. They are easy to navigate because XML and JSON have keys and values: if you find the keys, you will find the observations you are looking for.
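Here is a sketch of "find the keys, find the observations" over a small collection of tweets. The two tweets are hypothetical; the second has no `place` key, mirroring the earlier point that you cannot collect locations users do not share. `purrr::map_chr()` treats a character vector such as `c("user", "screen_name")` as a path into each nested list, and `.default` fills in keys that are missing.

```r
library(jsonlite)
library(purrr)

# Hypothetical mini-collection of tweets; the second one has no "place" key
tweets_json <- '[
  {"id_str": "1", "user": {"screen_name": "alice"}, "place": {"full_name": "Berkeley, CA"}},
  {"id_str": "2", "user": {"screen_name": "bob"}}
]'

# simplifyVector = FALSE keeps the raw nested-list structure
tweets <- fromJSON(tweets_json, simplifyVector = FALSE)

# Each column is built by following a path of keys through every tweet
tweets_df <- data.frame(
  id_str      = map_chr(tweets, "id_str"),
  screen_name = map_chr(tweets, c("user", "screen_name")),
  place       = map_chr(tweets, c("place", "full_name"), .default = NA_character_)
)
tweets_df
```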

Downside

Access is rate-limited.

If you want access to more or different data than what is freely available, you need to pay for premium access.